Y Combinator AI Startup School: Day 2 Recap

What Satya Nadella, Garry Tan, Andrej Karpathy and the Best Minds in AI Just Told Founders to Build Next

Yesterday was Day 2 of Y Combinator’s AI Startup School. Thousands of people applied, but only 2,500 got in. Here’s what you shouldn’t miss from the best minds in AI:

Hey, welcome to The AI Opportunity!

Every week you’ll get two things—fast:

What’s happening now (and where the money’s headed).

Who’s already building it (so you can invest, partner, or steal their playbook).

However, most of this newsletter’s content will be accessible only to paid subscribers.


1/7- Satya Nadella (CEO, Microsoft)

1. Platforms Compound

AI didn’t emerge in a vacuum. It builds on decades of cloud infrastructure, which has evolved to support massive-scale model training. Each platform generation unlocks the next.

2. Models vs. Products

Foundation models are infrastructure—like a new kind of SQL. The real product isn’t the model itself, but what surrounds it: feedback loops, tool integration, and user interaction.

3. Economic Impact is the Benchmark

Satya’s north star for AI: “Is it creating economic surplus?”

If it doesn’t move GDP, it’s not transformative.

4. Compute and Intelligence

Yes, intelligence increases with compute (logarithmically). But future leaps won’t come from scale alone—expect paradigm shifts like the next “scaling law moment.”
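To make “logarithmic” concrete, here is a toy Python sketch. The scoring function `toy_capability` and its constants `a` and `b` are hypothetical, chosen only to show the shape of the curve; they are not figures from the talk or from any real model. Under this assumption, each 10x increase in compute adds the same fixed gain, which is why scale alone becomes expensive.

```python
import math


def toy_capability(compute: float, a: float = 0.0, b: float = 1.0) -> float:
    """Hypothetical score that grows with log10 of compute (illustrative only)."""
    return a + b * math.log10(compute)


# Each 10x step in compute adds the same fixed increment (b) to the score,
# so going from 1e21 to 1e24 costs 1000x more compute for only 3 more points.
for compute in [1e21, 1e22, 1e23, 1e24]:
    print(f"compute={compute:.0e}  score={toy_capability(compute):.1f}")
```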

5. Energy and Social Permission

Scaling AI will require more energy—and society’s permission to use it. To earn that, we must show real, positive impact that justifies the cost.

6. AI’s Real Bottleneck: Change Management

Legacy industries aren’t held back by tech—they’re held back by workflows. Real transformation requires rethinking how work gets done, not just plugging in AI.

7. Job Roles Are Merging

At LinkedIn and other orgs, roles like design, frontend, and product are collapsing into full-stack builders. AI is accelerating this trend by making more people capable across disciplines.

8. Underestimating Drudgery

Knowledge work has a surprising amount of repetitive grunt work. AI is best applied to eliminate this invisible friction and free up human creativity.

9. Be Open to What’s Next

Even Satya didn’t expect test-time compute and reinforcement learning to progress so fast. Don’t assume we’ve seen the final form of AI; more breakthroughs are likely ahead.

10. Don't Anthropomorphize AI

AI is not a person. It’s a tool. The next frontier is equipping it with memory, tools, and the right to take action, without confusing it with human reasoning.

11. The Future of Development

AI won’t replace developers; it will empower them. VS Code is a canvas for collaborating with AI. Software engineering will shift from writing code to system design and quality enforcement.

12. Responsibility and Trust

AI doesn’t remove accountability. Companies are still legally responsible for what their tools do. That’s why privacy, security, and sovereignty must remain central.

13. How to Earn Trust

Trust comes from usefulness, not rhetoric. Satya pointed to a chatbot deployed for farmers in India as an example—concrete help builds belief.

14. From Speech to Agents

Microsoft’s AI journey started in 1995 with speech. Now, the focus is on full agents—powered by speech, vision, and ambient computing form factors.

15. Agents Are the New Computers

Satya’s long-term vision: “Agents become computers.”

That future depends not just on precision, but on trust and seamless interaction.

16. Leadership Lessons

His advice: take the lowest open role—but act with the highest ambition. Learn how to build teams, not just products.

17. People Satya Looks For

He values people who:

– Bring clarity rather than confusion

– Generate energy and rally others

– Thrive in solving hard, over-constrained problems

18. Favorite Interview Question

“Tell me about a problem you didn’t know how to solve, and how you solved it.”

He’s looking for curiosity, adaptability, and grit.

19. Quantum Computing

The next big unlock may be quantum. Microsoft is focused on error-correcting qubits, which could eventually allow us to simulate nature with unmatched fidelity.

20. Advice to Young People

Don’t wait for permission. Build tools that give people real empowerment. His question to his past self: “What can we create that helps others create?”

21. Favorite Products

VS Code and Excel, because they give people superpowers.

2/7- Andrej Karpathy (Former Director of AI, Tesla)

1. Software 3.0 will absorb everything before it

Karpathy explains the evolution: Software 1.0 was code, 2.0 was neural network weights, and 3.0 is now prompts.

At Tesla, neural nets gradually replaced traditional C++—and that cycle is happening again with LLMs and prompting interfaces.

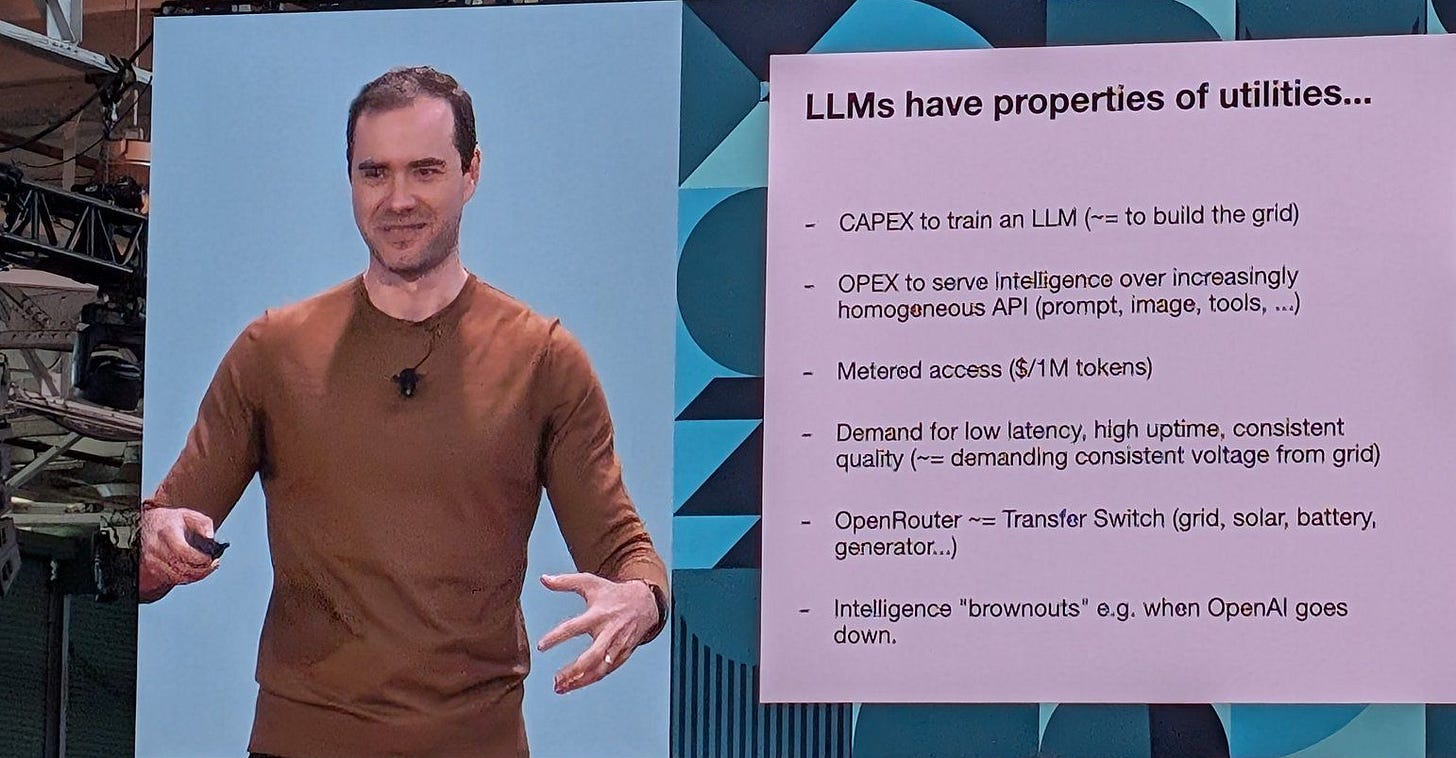
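A minimal Python sketch of the 1.0 versus 3.0 contrast, skipping 2.0 (learned weights) for brevity. The same sentiment check is written once as explicit code and once as an English prompt. `complete` is a hypothetical stand-in for whatever LLM call you use, and the stub `fake_llm` exists only so the example runs offline.

```python
from typing import Callable


# Software 1.0: the behavior is spelled out explicitly in code.
def is_positive_v1(review: str) -> bool:
    positive_words = {"great", "love", "excellent"}
    return any(word in positive_words for word in review.lower().split())


# Software 3.0: the behavior is specified in English and executed by an LLM.
PROMPT_TEMPLATE = (
    "Classify the sentiment of this review as POSITIVE or NEGATIVE.\n"
    "Review: {review}\n"
    "Answer:"
)


def is_positive_v3(review: str, complete: Callable[[str], str]) -> bool:
    # `complete` is a hypothetical stand-in for any LLM completion call.
    answer = complete(PROMPT_TEMPLATE.format(review=review))
    return answer.strip().upper().startswith("POSITIVE")


# Hypothetical stub so the example runs without a real model.
def fake_llm(prompt: str) -> str:
    return " POSITIVE" if "love" in prompt.lower() else " NEGATIVE"


print(is_positive_v1("I love this editor"), is_positive_v3("I love this editor", fake_llm))
```

In the 3.0 version, changing the behavior means editing the prompt rather than the word list, which is the shift Karpathy describes.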

2. LLMs behave more like infrastructure than products

They require massive upfront investment (like utilities or semiconductor fabs) and have recurring operational costs. They are also hard to switch away from, just like operating systems, and prompt design is becoming the new programming interface.

3. We're back in the era of cloud-based time-sharing

The LLM stack looks like old-school computing: the OS runs in the cloud, inputs and outputs are streamed, and compute is shared across users. The chat interface is today’s version of the terminal.

4. LLM adoption is moving in reverse

Most transformative technologies spread from government to enterprise to consumers. LLMs are going the other way: consumer → enterprise → government. This unexpected pattern is shaping how regulation, monetization, and usage evolve.

5. LLMs simulate humans—impressively but imperfectly

They mimic human behavior and carry encyclopedic knowledge, but they lack long-term memory, continual learning, and real understanding. Think of them as savants with cognitive blind spots: powerful, but unreliable.

6. The biggest AI opportunity is partial autonomy

Rather than aiming for full replacement, Karpathy sees the future in “Iron Man suit” augmentation: tools that assist, verify, and automate selectively. This includes copilots, app-specific interfaces, and adjustable autonomy.
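One way to picture adjustable autonomy is a setting that decides whether an AI-proposed change is only suggested, applied after human approval, or applied automatically when it is low-risk. The sketch below assumes three hypothetical levels (`SUGGEST`, `APPROVE`, `AUTO`) and a human `approve` callback; real copilots expose this differently, if at all.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Autonomy(IntEnum):
    SUGGEST = 0  # AI only proposes; the human applies changes by hand
    APPROVE = 1  # AI applies a change only after the human approves it
    AUTO = 2     # AI applies low-risk changes on its own


@dataclass
class Change:
    description: str
    risky: bool


def handle(change: Change, level: Autonomy, approve: Callable[[Change], bool]) -> str:
    """Decide what happens to an AI-proposed change at a given autonomy level."""
    if level == Autonomy.SUGGEST:
        return "suggested"
    if level == Autonomy.AUTO and not change.risky:
        return "applied"
    # APPROVE level, or a risky change at AUTO level: a human verifies first.
    return "applied" if approve(change) else "rejected"


print(handle(Change("rename a variable", risky=False), Autonomy.AUTO, lambda c: False))
```

The design choice is that risk, not just the slider position, gates automation: even at the highest level, risky changes still go through a human.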

7. Use AI to generate small, testable code pieces

Karpathy advocates for “vibe coding”: let AI help in small, low-risk tasks. Full agents are often too reactive or unpredictable. Keep things simple and iterative.

8. DevOps is still the bottleneck

Even for personal projects, generating code with AI is easy. But deploying it? Still a frustrating experience filled with manual browser clicking and slow workflows.

9. New primitives are needed for AI-native apps

LLM-era software will need new standards like llms.txt, LLM-specific documentation, and tooling for context injection. This ecosystem is still in its infancy.
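For a sense of what llms.txt looks like: it is a proposed convention (documented at llmstxt.org) for a Markdown file served at `/llms.txt` that starts with an H1 project name, follows with a blockquote summary, and lists key documentation links under H2 sections. The Python helper `render_llms_txt`, the project name, and the example.com URLs below are hypothetical placeholders; check the proposal itself for the full format.

```python
def render_llms_txt(
    name: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render a minimal llms.txt-style Markdown document.

    `sections` maps an H2 heading to (title, url, note) link entries.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines)


# Hypothetical project, used only to illustrate the format.
doc = render_llms_txt(
    "ExampleLib",
    "A hypothetical library used here only to illustrate the format.",
    {"Docs": [("Quickstart", "https://example.com/quickstart.md", "install and first call")]},
)
print(doc)
```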

10. The “decade of agents” is just beginning

Karpathy thinks 2025–2035 will be defined by agents, but it won’t be overnight. Success will come from augmentation-first products, not humanoid replacements. Think UX, not robotics.

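11. The anatomy of Cursor and Perplexity

Karpathy pointed to Cursor and Perplexity as examples of partial-autonomy apps: they manage context for the model, orchestrate multiple LLM calls behind the scenes, offer an app-specific GUI that makes AI output quick to verify, and let users adjust how much autonomy the AI gets.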

3/7- Andrew Ng (Founder, DeepLearning.AI)

1. Execution Speed Predicts Success

The single strongest predictor of startup success is how quickly you can build, test, and iterate. Speed compounds learning—and AI accelerates it even more.

2. Most Opportunities Are in the Application Layer

The biggest gains right now aren’t from building new models—they come from applying existing models in valuable, user-facing ways. That’s where founders should focus.

3. Agentic AI Beats Zero-Shot

Products that include a feedback loop—like agentic AI—outperform one-shot tools. Interaction improves outcomes, and iteration compounds performance.
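The difference between zero-shot and agentic use can be sketched as a draft, critique, revise loop. In this Python sketch, `draft` and `critique` are hypothetical stand-ins for model calls (toy string functions here so it runs offline); with `max_rounds=1` it degenerates to a single zero-shot answer.

```python
from typing import Callable, Optional


def agentic_loop(
    task: str,
    draft: Callable[[str, Optional[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> str:
    """Draft, critique, and revise until the critic is satisfied or rounds run out.

    `critique` returns None when it has no further objections.
    """
    output = draft(task, None)  # round 1 is the zero-shot answer
    for _ in range(max_rounds - 1):
        feedback = critique(output)
        if feedback is None:
            break
        output = draft(task, feedback)  # revise using the critic's feedback
    return output


# Hypothetical toy stand-ins for model calls.
def toy_draft(task: str, feedback: Optional[str]) -> str:
    return task if feedback is None else f"{task} + {feedback}"


def toy_critique(output: str) -> Optional[str]:
    return None if "tests" in output else "tests"


print(agentic_loop("write parser", toy_draft, toy_critique))
print(agentic_loop("write parser", toy_draft, toy_critique, max_rounds=1))
```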

4. An Orchestration Layer Is Emerging

There’s a new middle layer forming between foundation models and apps: agentic orchestration. This is the layer that enables complex multi-step tasks across tools and data sources.

5. Concrete Ideas Create Speed

The best way to move fast is to start with a concrete idea: one that’s detailed enough that an engineer could start building it immediately. Good concrete ideas often come from domain experts with gut-level clarity.

6. Vague Ideas Are a Trap

Abstract goals like “AI for healthcare” sound ambitious but lead to slow execution. Specific tools—like “automating MRI bookings”—unlock real speed.

7. Be Willing to Pivot (Concrete Ideas Make It Easier)

If early data shows your idea isn’t working, it’s easier to pivot when your idea was concrete. Clarity on what you’re testing helps you move forward quickly in another direction.

8. Use Feedback Loops to De-Risk

You can now build prototypes 10x faster and production-ready software 30–50% faster. Use those cycles to reduce customer risk through live feedback.

9. Build Many, Not Perfect

Don’t try to perfect your first version. Build 20 rough prototypes to see what sticks. Speed of learning matters more than polish.

10. Move Fast and Be Responsible

Ng’s version of the classic startup mantra: don’t move fast and break things—move fast and be responsible. Responsibility is what builds trust.

11. Code Is Losing Its Scarcity Value

Code isn’t the asset it used to be. With rapid prototyping tools and AI, code is easy to produce. What matters is what the code enables.

12. Architecture Is Reversible

Picking an architecture used to be a one-way decision. Now it’s a two-way door—easy to change. That flexibility allows for more risk-taking and faster experimentation.

13. Everyone Should Learn to Code

Saying “don’t learn to code” is bad advice. People said the same thing when we moved from assembly to modern languages. As AI makes code easier, more people across roles should be coding.

14. Domain Expertise Makes AI Better

Knowledge of your field unlocks better AI use. Art historians write better image prompts. Doctors could shape better health AI. Founders should marry domain knowledge with AI literacy.

15. PMs Are the Bottleneck Now

The new constraint isn’t engineering—it’s product management. One of Ng’s teams even proposed flipping the ratio to 2 PMs per engineer to speed up feedback and decisions.

16. Product Sense Matters in Engineering

Engineers with product intuition move faster and build better things. Technical skills alone aren’t enough—builders need user understanding too.

17. Get Feedback as Fast as Possible

Ng’s hierarchy of speed (from fastest to slowest):

– Dogfood internally

– Ask friends

– Ask strangers

– Ship to 1,000 users

– Run global A/B tests

Work your way up the stack as fast as possible.

18. Deep AI Knowledge Is Still a Moat

AI literacy isn’t widespread yet. Those who understand how the pieces fit together have an advantage—they can build smarter, faster, and with more autonomy.

19. Hype ≠ Truth

Beware of narratives that sound impressive but mostly help with fundraising or status. Terms like AGI, extinction, and infinite intelligence are often signals of hype, not impact.

20. Safety Is About Use, Not Tech

The idea of “AI safety” is often misunderstood. AI, like electricity or fire, isn’t inherently good or bad—it depends on how it’s applied. Safety is about use, not the tool itself.

21. Focus on Whether Users Love It

Ignore token costs and benchmarks. The only thing that really matters is: Are you building a product users love and use?

22. Education AI Is Still in Flux

Kira Learning, an AI education company Ng is involved with, is experimenting a lot, but the end state of AI in education isn’t obvious yet. We’re still in the early stages of transformation.

23. Beware Doomerism and Regulatory Capture

Overblown fears about AI are being used to justify regulation that protects incumbents. Be skeptical of “AI safety” narratives that benefit those already in power.

Don’t worry about token costs. Worry about pull.

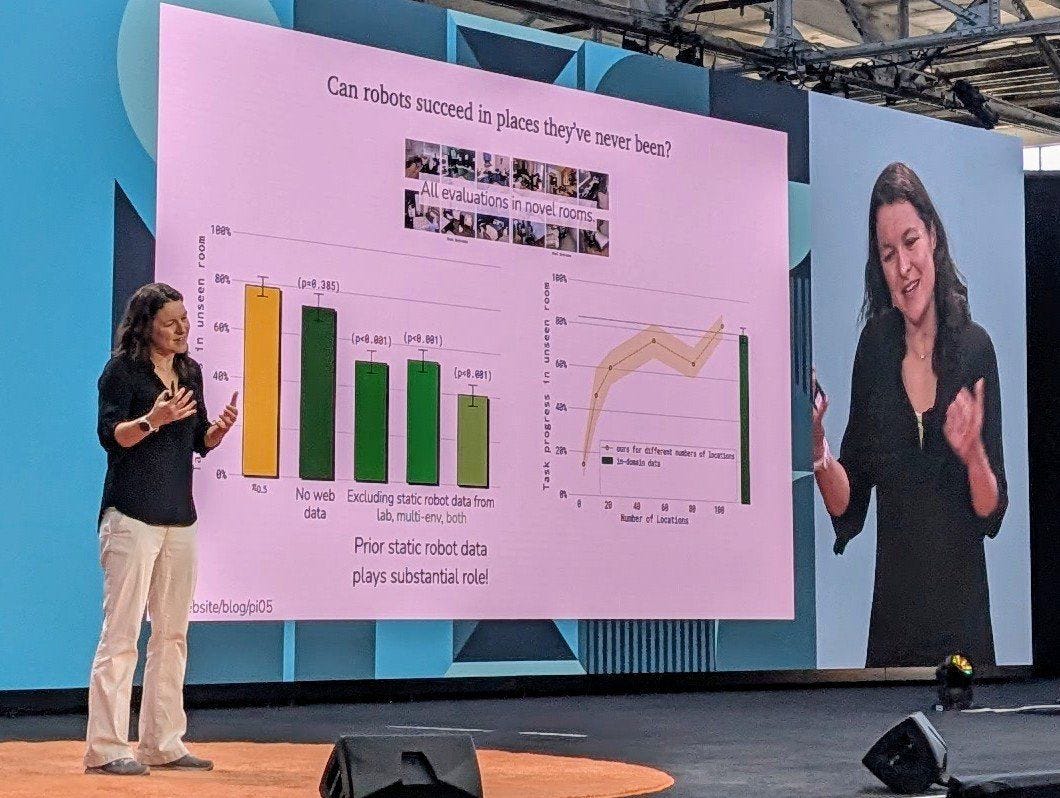

4/7- Chelsea Finn (Co-Founder, Physical Intelligence & Assistant Professor, Stanford)

1. Robotics requires full-stack focus

You can’t just add robotics to an existing company. You need to build the whole stack—data, models, deployment—from scratch.

2. Data quality beats quantity

Massive datasets (from industry, YouTube, simulations) lack key traits like diversity or realism. The right, high-quality data is more important than scale.

3. Best results come from pretraining + fine-tuning

Training on broad datasets then fine-tuning on ~1,000 clean, consistent examples dramatically boosts performance.

4. Generalist robots will outperform specialists

Models that can generalize across tasks and hardware (e.g., third-party robots) are proving more successful than purpose-built systems.

5. Real-world data is irreplaceable

Synthetic and simulation data help, but real data remains essential—especially for complex visual and physical tasks.

6. Too many resources can backfire

Overfunding or overcomplicating things can slow progress. Clarity of problem and focused execution matter most.

5/7- Michael Truell (CEO, Co-Founder, Cursor)

1. Start early and keep building

Michael kept coding even when his brother quit. Early viral success (Flappy Bird spoof) helped build confidence and skill.

2. Prototype fast, even in unfamiliar fields

The team built a mechanical engineering copilot without any prior experience in the field. They learned by doing.

3. Don’t fear big players if your ambition is different

They hesitated to compete with GitHub Copilot, but realized few were aiming for full dev automation. That opened the opportunity.

4. Move quickly from code to launch

It took only 3 months to go from the first line of code to public release. Rapid iteration helped refine product direction.

5. Focus beats complexity

They stopped trying to build both an IDE and AI tools. Concentrating on AI features unlocked faster progress.

6. Distribution can start with one tweet

Early growth came from a cofounder tweeting. Word-of-mouth drove adoption before they had formal marketing.

7. Execution compounds

In 2024, Cursor grew from $1M to $100M ARR in a year, with 10% week-over-week growth, driven by product improvements and user demand.
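The 10% weekly growth figure can be sanity-checked with compound-growth arithmetic. Compounding 10% weekly for 52 weeks gives roughly a 142x multiple, and a 100x jump needs only about 48 weeks, so the two reported numbers are consistent with each other. The sketch just does that arithmetic; it uses no Cursor data beyond the two figures quoted above.

```python
import math

weekly_growth = 0.10  # 10% week-over-week, as reported
weeks_per_year = 52

# Compounding 10% every week for a full year.
annual_multiple = (1 + weekly_growth) ** weeks_per_year
print(f"10% weekly growth for 52 weeks = {annual_multiple:.0f}x")

# Weeks needed to go from $1M to $100M ARR (a 100x increase) at that rate.
weeks_to_100x = math.log(100) / math.log(1 + weekly_growth)
print(f"100x at 10% weekly growth takes about {weeks_to_100x:.1f} weeks")
```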

8. Best advice: follow your curiosity

Forget resume-building. Michael’s main advice: work on things that interest you with smart people.

6/7- Dylan Field (CEO, Co-Founder, Figma)

1. Find a cofounder who energizes you

Dylan stayed motivated by working with Evan Wallace, his cofounder. “Every week felt like inventing the future.”

2. Start early, and learn by doing

Began startup projects at 19 while still in college. Early failures (like a meme generator) helped sharpen bigger ideas like Figma.

3. Launch fast, get feedback faster

Cold-emailed early users, iterated quickly, and charged from the start. Feedback was the constant driver of product evolution.

4. Chunk long roadmaps into sprints

Breaking down big visions into smaller pieces made speed and execution possible.

5. Product-market fit can take years

Took 5 years before a major signal: Microsoft threatening to cancel if Figma didn’t start charging.

6. Design is the new differentiator

Believes design is becoming central due to AI’s rise—Figma is evolving with products like Draw, Buzz, Sites, and Make.

7. Prototype with AI, fast

AI is best used to increase iteration speed, not to produce magic output. Designers and PMs must now contribute to AI evaluations.

8. Reject rejection avoidance

Childhood acting helped Dylan learn to embrace feedback. Rejection is part of the path.

9. Keep human connection central

Warns against replacing human relationships with AI. The meaning of life? "Explore consciousness, learn, share love."

7/7- Sriram Krishnan (Senior Policy Advisor for AI, The White House)

1. AI Pulled Him Into Government

Sriram never planned on entering politics. It was his growing interest in the implications of AI that brought him to the White House, after a message from David Sacks. He sees AI as a new space race and considers events like DeepSeek’s release his “Sputnik moment.”

2. The U.S. Needs to Stay Competitive in AI

He warns against complacency—while many believed the U.S. was far ahead of China in AI, the gap is not as wide as it seemed. He wants to empower American builders and believes open-source AI with American values is critical for long-term leadership.

3. What Government Can and Should Do

The government should focus on what only it can provide: energy, compute infrastructure, and regulatory support. He’s pushing for reduced regulation, more export of U.S. AI hardware/software, and strategic partnerships with sovereign wealth funds (e.g., selling GPUs to Gulf countries).

4. Learnings From Silicon Valley and Twitter

From working with Elon at Twitter, he learned that a small, mission-aligned team can accomplish a lot. DC’s culture is slower and more hierarchical, but still highly impactful when the right people are involved. His favorite framework is the OODA loop: Observe, Orient, Decide, Act.

5. Relationships Are a Superpower

He emphasizes authentic relationships: everyone is one email away, and you should follow up every 6 months. Trust and impact build credibility—not talk. He recommends The Infinite Game as a mindset guide and jokes that “Make Something People Want” hats are like MAGA hats for tech.

6. Advice to Young Builders

You’re in the right place and time (SF, AI boom). Focus on building things and relationships. The opportunity is massive—and it's just beginning. You can DM him.

And this is it!

Don’t forget to share and subscribe!

Cheers,

-Guillermo

FAQs – YC AI Startup School Recap

What is the YC AI Startup School and why is it important?

YC AI Startup School is a curated event by Y Combinator that brings together the top minds in AI, including Satya Nadella (Microsoft), Andrej Karpathy (formerly Tesla), Andrew Ng (DeepLearning.AI), and others, to teach founders how to build the next generation of AI startups. It shares insights on product, compute, regulation, robotics, and the future of software.

What did Satya Nadella say about AI and compute?

Satya explained that AI builds on decades of cloud infrastructure. Intelligence scales logarithmically with compute, but real breakthroughs come from paradigm shifts—not just raw power. He emphasized earning social permission for energy usage and building trust through economic impact.

What are the main takeaways from Andrej Karpathy at YC AI School?

Karpathy highlighted the transition to Software 3.0—where prompts replace code—and explained why LLMs act like cloud-based utilities. He predicts that partial autonomy and AI augmentation will define the 2025–2035 era, not humanoid agents. DevOps remains a major bottleneck.

What is Andrew Ng’s advice for AI startup founders?

Ng believes execution speed is the #1 predictor of startup success. Founders should build concrete applications using existing models, avoid vague ideas, use feedback loops to de-risk, and test fast. He warns against overhyping AGI and urges responsible innovation.

Why is data quality more important than data quantity in robotics?

According to Chelsea Finn, massive datasets (e.g., YouTube, industrial logs) lack the consistency or realism needed for robotic tasks. The best results come from combining broad pretraining with ~1,000 high-quality, consistent real-world examples.

What did Michael Truell (Cursor) reveal about going from 0 to $100M ARR?

Cursor grew from $1M to $100M ARR in one year, thanks to fast execution and focus. Truell shared that the team moved from code to launch in 3 months, stopped splitting effort between building an IDE and AI tools in order to concentrate on AI features, and relied on product-led growth and viral distribution.

What role does design play in the future of AI, according to Dylan Field (Figma)?

Design is becoming a key differentiator as AI coding becomes commoditized. Dylan shared that design leaders must now guide AI product evaluation. He also emphasized launching fast, seeking feedback constantly, and warned against replacing human connection with AI.

What is the U.S. government’s strategy for AI leadership, according to Sriram Krishnan?

Sriram said the U.S. must focus on infrastructure, open-source AI with American values, and exporting GPU tech. He wants to measure market share through American-origin inference tokens and reduce regulatory friction to empower builders.

What is the “decade of AI agents” and why is it important?

Karpathy and others describe 2025–2035 as the decade of AI agents—tools that act, learn, and interact across systems. These agents will augment human capabilities, not replace them, with use cases like copilots, autonomous workflows, and orchestration layers.

How should early-stage AI founders get started?

Start with a concrete idea. Iterate fast. Use existing models. Get user feedback within days—not weeks. Focus on solving specific, painful problems. Move fast and be responsible, and don’t waste time perfecting your first version.

What’s the most valuable insight from YC AI Startup School for 2025 founders?

AI is not about building bigger models—it’s about building products people love. Success comes from fast iteration, clear focus, quality data, feedback loops, and trust. Every insight—from Microsoft to Cursor—underscores this one truth: execution beats everything.