Y Combinator AI Startup School DAY 1 Recap

What Sam Altman, Garry Tan, Elon Musk and the Best Minds in AI Just Told Founders to Build Next

Yesterday was day 1 of Y Combinator's AI Startup School. Thousands of people applied, but only 2,500 got in. Here’s what you shouldn’t miss from the best minds in AI:

Hey, welcome to The AI Opportunity!

Every week you’ll get two things—fast:

What’s happening now (and where the money’s headed).

Who’s already building it (so you can invest, partner, or steal their playbook).

However, most of this newsletter’s content will be accessible only to paid subscribers.


1. Garry Tan (President, YC)

YC has a 0.8% acceptance rate and a unicorn rate of up to 12%.

Here’s what CEO Garry Tan said Y Combinator is looking for:

Pragmatic builders who:

– Talk to users

– Get stuff done

– Treat their startup as a feat of strength

– Are patient about compounding

Garry’s insights:

“Agency scales.”

“The tools change. The mindset doesn’t.” (on what success looks like throughout a career)

2. Sam Altman (CEO, OpenAI)

Sam was very close to not starting OpenAI. AGI felt like a long shot, and DeepMind was far ahead. The small decision to go ahead changed everything.

ChatGPT started as pure science fiction. That made it easier to attract top talent. Being one-of-one beats competing in a crowded space.

If you're building what everyone else is building, hiring great people becomes brutally hard. Go after something bold and different.

Big ideas rarely look massive at the beginning. But if it works, it should be obvious that it could be huge.

A helpful mental model: choose an idea worth $0 billion instead of $0 million. You want high potential upside.

We’re just scratching the surface of what current models can do. There’s massive product overhang. And price/performance will keep improving fast.

Sam’s favorite feature so far is memory. It points to a future where personal agents connect to your services and proactively message you.

The vision: an always-on personal assistant that integrates memory, multimodality, and trusted decision-making.

OpenAI is building toward an agent store—ChatGPT becomes a platform, sending traffic to third-party tools.

Don’t try to compete head-on by building another core chat assistant. The best ideas are what no one else is working on.

Elon said GPT-1 was crap. Peter Thiel told Sam it’s hard to hold conviction when everyone disagrees. Stay in it—it gets easier over time.

AI agents are the next wave. First, ChatGPT replaced Google. Then Codex became a junior dev. Now agents will run entire workflows.

The world is being reshaped—interfaces, software, hardware, even manufacturing. This is the best time ever to start a company.

Technology’s arc means one person can now do what used to take a team. Iterate fast, at low cost.

Hiring smart, scrappy people with steep growth curves gets you 90% of the way. Look for slope, not polish.

Sam says the hardest part of being CEO of OpenAI is how much context he has to hold and how many things are happening at once.

What excites him most: AI for science over the next 10–20 years.

There’s a strong correlation between standard of living and energy per capita. That may matter more than people think.

One thing Sam wishes he learned earlier: trust your instincts. Have conviction, even when your idea sounds weird.

3. Jared Kaplan (Co-Founder, Anthropic)

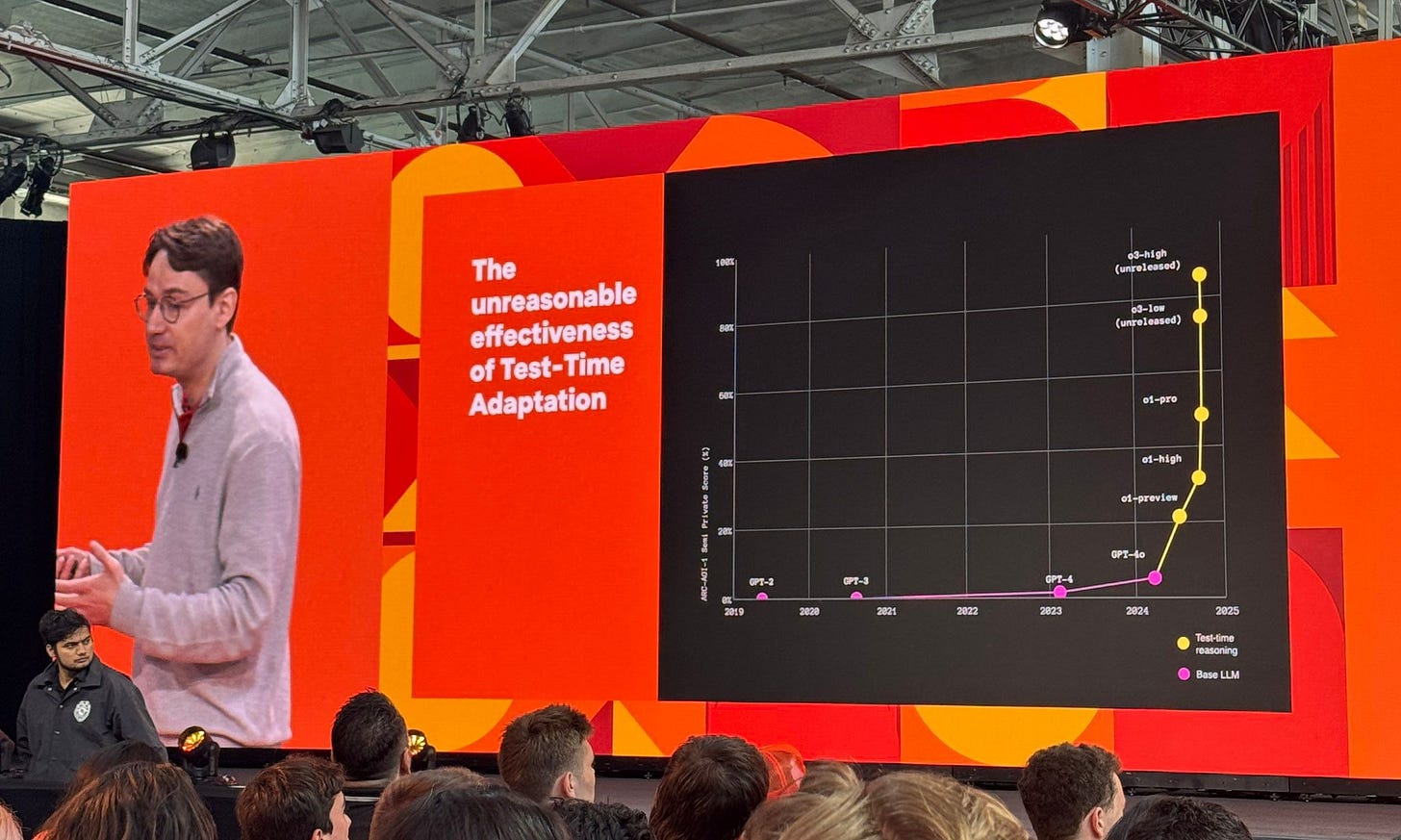

The scaling law (test loss falls as a power law of model size, data, and compute) became the foundation of modern AI. It’s the playbook for OpenAI, Anthropic, and Google.
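As a rough illustration of what “test loss falls as a power law” means, here is a minimal Python sketch of the single-variable form L(N) = (N_c / N)^α for model size N. The constants `ALPHA_N` and `N_C` are illustrative assumptions (similar in magnitude to the model-size fit in the 2020 scaling-laws paper), not numbers from the talk, and the function names are hypothetical.

```python
import math

# Illustrative assumptions, not figures from the talk: a power-law exponent
# and scale constant for model size N (parameter count).
ALPHA_N = 0.076
N_C = 8.8e13


def power_law_loss(n_params: float, alpha: float = ALPHA_N, n_c: float = N_C) -> float:
    """Predicted test loss under a pure power law: L(N) = (N_c / N) ** alpha."""
    return (n_c / n_params) ** alpha


def loss_factor_per_10x(alpha: float = ALPHA_N) -> float:
    """Every 10x increase in N multiplies the loss by the same factor, 10 ** -alpha."""
    return 10 ** (-alpha)


def log_log_slope(n1: float, n2: float) -> float:
    """Slope of log L vs. log N between two model sizes; equals -alpha for a power law."""
    return (math.log(power_law_loss(n2)) - math.log(power_law_loss(n1))) / (
        math.log(n2) - math.log(n1)
    )


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"N = {n:.0e} params -> predicted loss {power_law_loss(n):.3f}")
    print(f"loss factor per 10x: {loss_factor_per_10x():.3f}")
    print(f"log-log slope: {log_log_slope(1e8, 1e11):.3f}")
```

The constant slope of -α on a log-log plot is what makes the law useful for planning: results from small runs can be extrapolated to much larger ones.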

In 2024, the question is whether the scaling law applies to reinforcement learning. Early signs: it does.

Jared Kaplan says if scaling stops working, it’s because we screwed up—bottlenecks, not the law itself.

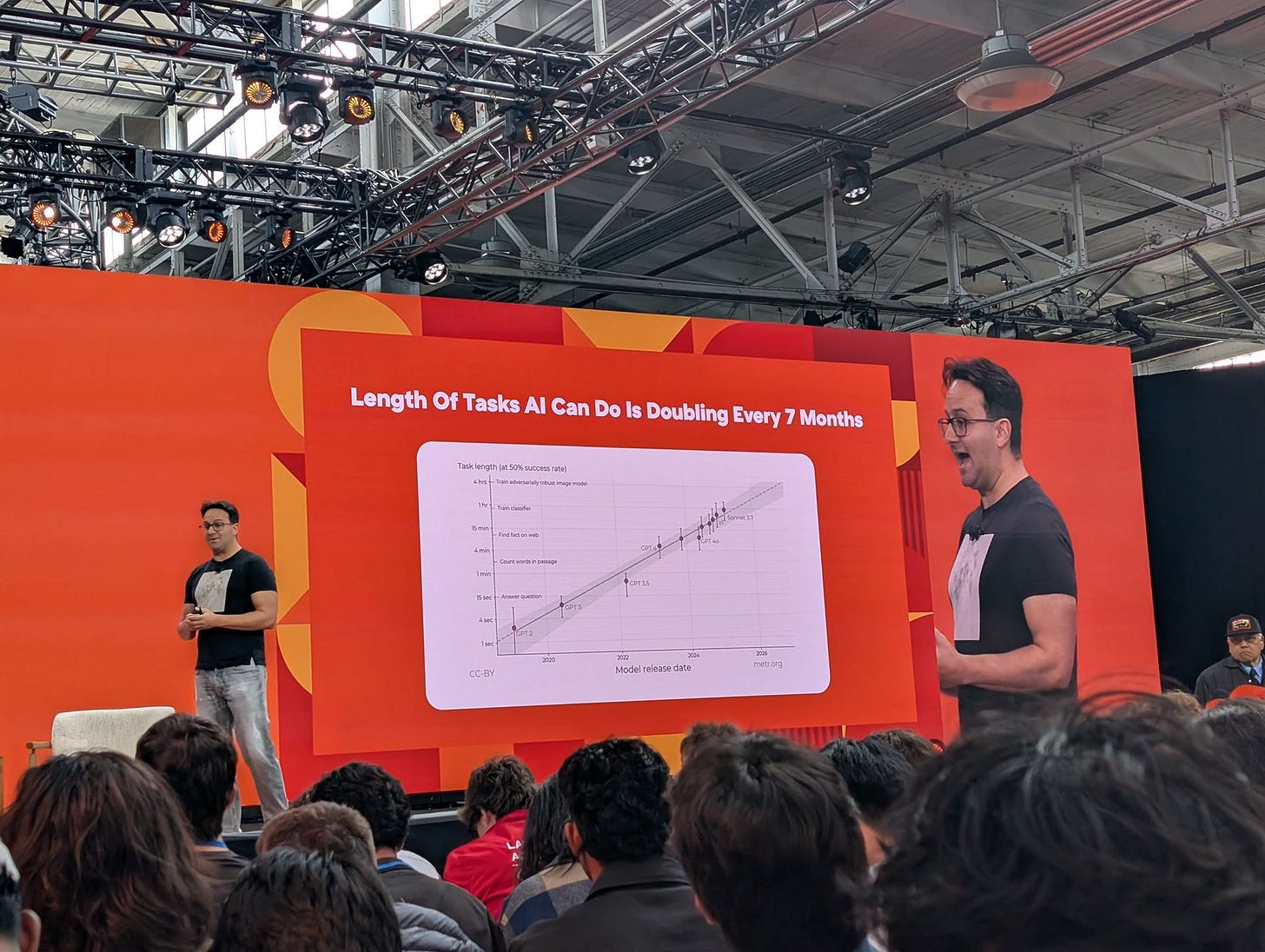

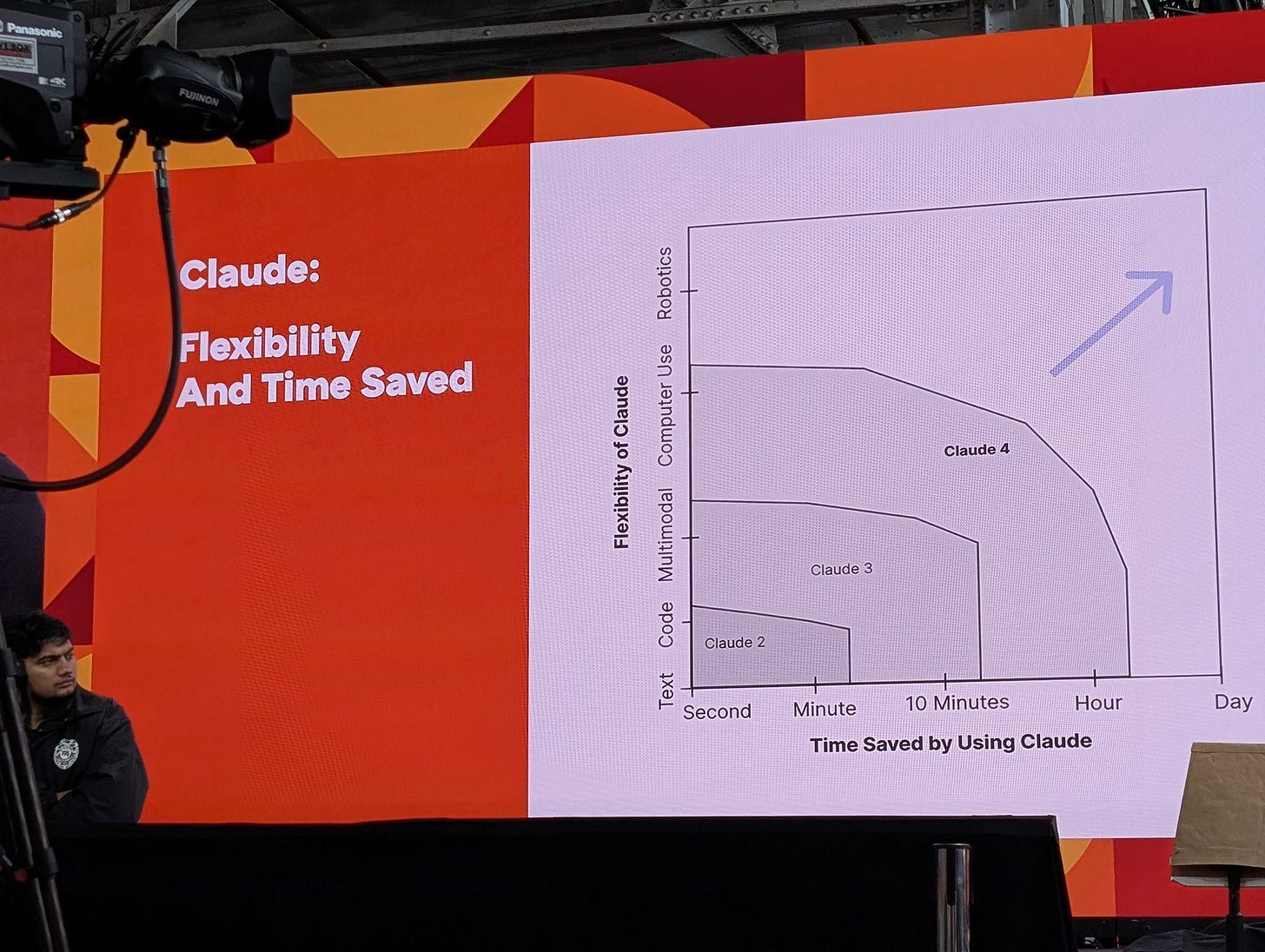

One key metric: the length of tasks AI can complete is doubling every 7 months. That trend is accelerating.
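The doubling claim is simple exponential arithmetic: with a doubling time T, a task horizon that starts at L₀ grows to L₀ · 2^(t/T) after t months. The sketch below assumes a constant 7-month doubling time (the talk said the trend is accelerating, so this is a conservative simplification); the one-hour starting horizon and the function names are hypothetical.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling time cited in the talk; assumed constant here


def task_horizon(months: float, start_hours: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task length AI can complete after `months`, assuming steady exponential growth."""
    return start_hours * 2 ** (months / doubling_months)


def months_until(target_hours: float, start_hours: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed to grow from start_hours to target_hours at the same doubling rate."""
    return doubling_months * math.log2(target_hours / start_hours)


if __name__ == "__main__":
    start = 1.0  # hypothetical starting horizon: a one-hour task
    for months in (0, 7, 12, 24, 42):
        print(f"after {months:2d} months: ~{task_horizon(months, start):.1f} hours")
    print(f"months to reach a 40-hour work week: ~{months_until(40, start):.0f}")
```

Under these assumptions a one-hour horizon becomes about 3.3 hours after a year and 64 hours after 42 months (six doublings).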

As models get more flexible, they can save more time for humans. We're moving toward longer and more complex task chains.

What’s left to solve: deeper knowledge, memory, oversight, multimodal integration, scaling across larger tasks, and tighter data coordination.

Human data will continue to matter—especially for unlocking longer task performance and memory.

Mechanistic interpretability still has huge gaps. Many basic questions remain unanswered. It’s closer to neuroscience than engineering.

But we can measure everything in a model. LLMs generate an unprecedented amount of observable data for reverse engineering cognition.

AI demand grows with AI supply. The more intelligence is available, the more people and businesses want to use it.

The next startup wave is shifting from copilots to direct replacements—especially in domains where some error is tolerable.

His advice for founders:

– Build products that don’t fully work yet

– Think about where exponential adoption might happen

– Get ready to deeply integrate AI into real-world use cases

Finance, law, and AI for existing businesses are promising areas. These fields have both high error tolerance and strong demand for automation.

4. François Chollet (CEO, Ndea)

Scaling alone doesn't unlock fluid intelligence. Adaptive behavior at runtime doesn't emerge just by pretraining bigger models.

There are two visions of AGI:

– Minsky: automating human tasks

– McCarthy: adaptability at test time

The second is harder, and more important.

Intelligence should manifest as innovation, not just automation. Skill is what intelligence produces.

Human exams are poor benchmarks. They reward memorization. What we want are models with fluidity, broad operational range, and informational efficiency.

Models optimize for their training targets. If you train on exams, you get memorization. If you want innovation, you need to target generalization and adaptability.

True general intelligence requires the ability to mine abstractions from past experience and recombine them on the fly for novel tasks.

These abstractions come in two forms:

– Type 1: value-level (clustering concrete examples)

– Type 2: program-level (composing functions)

Transformers are great at Type 1. But to achieve fluid intelligence, we need to use Type 1 to constrain the space of Type 2 possibilities.

That means building systems that perform discrete program search:

– Graph of operators/functions

– Learn through combinatorial search

– Validate via correction loops

The core challenge is the combinatorial explosion of function combinations. Fluid AGI must use Type 1 intuition to narrow that space.
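To make the idea concrete, here is a toy Python sketch of discrete program search over a tiny, hypothetical DSL of integer functions. `brute_force_search` enumerates every composition (the combinatorial explosion), while `guided_search` stands in for Type 1 intuition with a hand-set prior over primitives (`PRIOR` is an assumption; in Chollet's framing this guidance would come from a learned model). It skips unlikely primitives and expands the most probable programs first. Checking each candidate against the input/output examples plays the role of the validation loop. This is an illustration under those assumptions, not Ndea's method.

```python
import heapq
import math
from itertools import product

# Hypothetical DSL: each primitive is a function from int to int.
PRIMITIVES = {
    "inc": lambda x: x + 1,
    "dec": lambda x: x - 1,
    "double": lambda x: x * 2,
    "square": lambda x: x * x,
    "negate": lambda x: -x,
}

# Hypothetical "Type 1" intuition: how likely each primitive seems for this task.
PRIOR = {"double": 0.4, "inc": 0.3, "square": 0.25, "dec": 0.03, "negate": 0.02}


def run(program, x):
    """Apply the primitives in order (a program is a sequence of primitive names)."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x


def fits(program, examples):
    """Validation step: the program must reproduce every input/output pair."""
    return all(run(program, inp) == out for inp, out in examples)


def brute_force_search(examples, max_depth):
    """Try every composition up to max_depth; returns (program, candidates_tried)."""
    tried = 0
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            tried += 1
            if fits(program, examples):
                return list(program), tried
    return None, tried


def guided_search(examples, prior, max_depth, min_prob=0.1):
    """Best-first search ordered by prior probability, skipping unlikely primitives."""
    allowed = [name for name in PRIMITIVES if prior.get(name, 0.0) >= min_prob]
    frontier = [(0.0, ())]  # (cost = -sum(log p), program)
    tried = 0
    while frontier:
        cost, program = heapq.heappop(frontier)
        if program:
            tried += 1
            if fits(program, examples):
                return list(program), tried
        if len(program) < max_depth:
            for name in allowed:
                heapq.heappush(frontier, (cost - math.log(prior[name]), program + (name,)))
    return None, tried


if __name__ == "__main__":
    examples = [(1, 9), (2, 25), (3, 49)]  # hidden target: (2x + 1) ** 2
    print("brute force:", brute_force_search(examples, max_depth=3))
    print("guided:     ", guided_search(examples, PRIOR, max_depth=3))
```

On these examples both searches recover `double → inc → square`, but the guided version can only ever try the 39 programs built from its three allowed primitives, while brute force over all five primitives may work through up to 155. The catch mirrors the talk's point: a bad prior can prune the answer away entirely.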

5. John Jumper (Distinguished Scientist and Nobel Prize Winner, DeepMind)

In ML, architecture and design decisions often have a 100x greater impact on performance than just scaling data. Progress depends on insight, not brute force.

AlphaFold has 35K+ citations. It showed how small, focused teams can amplify data and compute with the right ideas.

Don’t prioritize elegance over real progress. Beauty comes later—results come first.

Define a clear, narrow target for your ML system. Breakthroughs in one domain often don’t generalize. AlphaFold doesn’t transfer directly to simulating molecular dynamics.

The question to ask when choosing what to build:

“What would I need to predict to excite you?”

That’s your direction.

Reduce the cost of failed experiments. This is how you increase iteration speed. Small tests → faster learning.

Run lots of small-scale experiments. Check direction periodically at larger scale. Iterate like gradient descent on ideas.

Open source tools can compound your impact by enabling others to build on your work.

Make sure the system you're building is something people need. Technical success means nothing without real-world pull.

6. Aravind Srinivas (CEO, Perplexity)


Perplexity’s long-term vision is a browser with integrated agents—built to run async tasks in parallel and access information beyond what server-side models can see.

Their edge comes from focusing specifically on search and browsers, instead of chasing general-purpose chat like OpenAI or sticking to ad-driven models like Google.

Google has poor incentives to improve core search because of how its ad business works. Startups can move faster and access better models via APIs from OpenAI or Anthropic.

Aravind started by trying to build better Twitter search before pivoting to what became Perplexity.

Sustained usage—retention at steady state—is the strongest signal a product is truly working.
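One common way to check for steady-state retention is to track a signup cohort week by week and see whether the share still active stops falling. The Python sketch below assumes weekly active counts for a single cohort; the cohort numbers, the three-week window, and the 2-point tolerance are hypothetical choices, not thresholds from the talk.

```python
def retention_curve(active_by_week: list[int]) -> list[float]:
    """Share of the original cohort still active each week (week 0 is 1.0)."""
    cohort_size = active_by_week[0]
    if cohort_size <= 0:
        raise ValueError("cohort must start with at least one user")
    return [active / cohort_size for active in active_by_week]


def has_flattened(curve: list[float], window: int = 3, tolerance: float = 0.02) -> bool:
    """True if the last `window` points stay within `tolerance` of each other."""
    if len(curve) < window:
        return False
    tail = curve[-window:]
    return max(tail) - min(tail) < tolerance


if __name__ == "__main__":
    # Hypothetical cohorts of 1,000 signups each.
    sticky = [1000, 620, 480, 430, 415, 410, 408]  # levels off around 41%
    leaky = [1000, 500, 250, 125, 62, 31]  # keeps halving every week
    for name, cohort in (("sticky", sticky), ("leaky", leaky)):
        curve = retention_curve(cohort)
        print(f"{name}: final retention {curve[-1]:.0%}, flattened={has_flattened(curve)}")
```

A curve that levels off means a stable core of users keeps coming back; one that keeps decaying toward zero means usage is leaking away no matter how fast signups grow.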

Perplexity’s upcoming browser will be able to access data unavailable to cloud models, giving it a unique advantage in knowledge retrieval.

On building with AI: “Vibe coding” is not enough. You need to understand core concepts like distributed systems.

At Perplexity, engineers are required to use at least one AI coding tool (e.g., Cursor). They can upload a photo of pseudocode and let the AI update the files.

Vibe coding works well for frontend tasks—but deeper system understanding still matters.

Aravind’s take on handling setbacks: “I watch Elon Musk motivational videos.”

His view on AI startups: it will take 2–3 years to gain real traction. This is a long game.

7. Fei-Fei Li (AI Researcher/CEO, Stanford/World Labs)

Language is purely generative. The world is not. Intelligence must be grounded in perception, not just prediction.

Her best students all share one trait: intellectual fearlessness—the courage to ask fundamental questions and pursue bold ideas.

“For a while, I felt like a robot. But technology should make us more human, not less.”

The goal isn’t to replace us; it’s to elevate us.

“You can just do things.”

8. Elon Musk (CEO, Tesla/SpaceX)

“I didn’t think I’d build anything technically great. Probabilistically, it seemed unlikely. But I wanted to at least try.”

On starting out: he applied for a job at Netscape but never heard back. So he decided to build something himself—eventually launching Zip2.

Zip2 struggled with misaligned board interests, especially from legacy media. Lesson: don’t let ego or politics break the feedback loop.

“Don’t aspire to glory. Aspire to work.”

If the ego-to-ability ratio gets too high in a team, innovation stalls.

On success: rationalize your ego. Do whatever it takes to get things done. Iterate relentlessly.

First principles thinking: Start from the ground truth—axioms—and reason up. Think in the limit of zero or infinity to find edge cases.

In 2001, he tried to buy ICBMs from Russia. When negotiations failed, he decided to build his own rocket. That became SpaceX.

On leaving DOGE (the Department of Government Efficiency): “It’s like cleaning a beach full of trash while a 1,000-foot AI tsunami is approaching. It’s time to focus on the tsunami.”

Grok-3.5 is in training. It will be a reasoning model.

He believes superintelligence may arrive this year or next.

Neuralink’s first vision implants will be tested in humans within 6–12 months. A monkey has used one for over 3 years.

Earth is only 1–2% of the way to Kardashev Type I (harnessing the planet’s full energy potential).

Mars: 30 years to a self-sustaining colony.

It’s unlikely we’ll have one dominant intelligence. More likely, there will be around 10 major AIs—4 of them in the U.S.

I hope this was valuable for you all!

Talk soon,

- Guillermo

All the sources:

https://x.com/selbergkris/status/1934676492291596401?s=46

https://x.com/jia_seed/status/1934761718477099291?s=46

https://x.com/ycombinator/status/1922001244622881026?s=46

https://x.com/addyosmani/status/1934717065828630888?s=46

https://x.com/sadri10294/status/1934785950909124798?s=46

https://x.com/colinjangel/status/1934814401900761519?s=46


https://x.com/aaron_epstein/status/1934662170073289209?s=46

https://x.com/sadri10294/status/1934751337708962282?s=46


FAQs: Frequently Asked Questions

1. What is Y Combinator’s AI Startup School?

Y Combinator’s AI Startup School is an invite-only event that brings together 2,500 handpicked builders to learn directly from top AI founders and investors like Garry Tan, Sam Altman, and François Chollet. It offers deep insights into scaling AI startups, building agents, and navigating the future of artificial intelligence.

2. Who were the top speakers at Y Combinator’s AI Startup School 2025?

Notable speakers included:

Garry Tan (President, YC)

Sam Altman (CEO, OpenAI)

Jared Kaplan (Co-Founder, Anthropic)

François Chollet (CEO, Ndea)

John Jumper (DeepMind)

Aravind Srinivas (CEO, Perplexity)

Fei-Fei Li (Stanford)

Elon Musk (Tesla/SpaceX)

3. What advice did Garry Tan give at YC’s AI Startup School?

Garry Tan emphasized that YC looks for pragmatic builders who talk to users, move fast, and play long games. His key insight: “Agency scales. The tools change. The mindset doesn’t.”

4. What did Sam Altman say about the future of AI agents?

Sam Altman believes AI agents will run entire workflows. He sees memory, personalization, and multimodality as the next leap, with ChatGPT evolving into a platform with an agent store that sends traffic to third-party tools.

5. What is the AI scaling law, and who invented it?

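The AI scaling laws are most closely associated with Jared Kaplan, now a co-founder of Anthropic, who was lead author of the 2020 OpenAI paper “Scaling Laws for Neural Language Models.” It showed that test loss falls predictably, as a power law, with more compute, data, and parameters, and that result still guides AI’s trajectory.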

6. What is François Chollet’s vision for AGI?

Chollet believes true general intelligence requires adaptability, not just automation. He differentiates between value-level abstractions (patterns) and program-level reasoning (compositionality), arguing that AGI needs both.

7. What makes Perplexity AI different from OpenAI or Google?

Perplexity focuses on search and browsing, not general-purpose chat. Its upcoming browser will integrate agents that run asynchronous tasks in parallel and can access information that server-side models cannot see.

8. What is the next wave of AI startups?

According to speakers from Anthropic, OpenAI, and Perplexity:

AI agents (not copilots)

Domain-specific automation (law, finance)

Agentic browsers

Personalized assistants with memory

9. What is the product overhang in AI?

It refers to the vast gap between what current models can do and what has been built with them. Many AI founders believe we’re just scratching the surface of what today’s LLMs are capable of.

10. Why is this the best time ever to start an AI company?

Tech costs are dropping, AI talent is widely available, and foundational infrastructure (models, APIs, tooling) is mature enough to rapidly prototype new products—especially agents and domain-specific automations.

🧑‍💼 BUILDER / INVESTOR-SPECIFIC

11. What kind of founders does Y Combinator want for AI startups?

YC wants builders who:

Talk to users constantly

Ship fast and iterate

View their company as a “feat of strength”

Have long-term commitment and compound learning

12. What are the best ideas to build in AI right now, according to Sam Altman?

Altman recommends chasing ideas that look like "$0B" today but have the potential to become massive if they work—especially ones where top talent will want to work, and where incumbents aren’t already focused.

13. What’s the best way to stand out as an AI startup in 2025?

Don’t compete with OpenAI or Google head-on. Instead, focus on specific domains, workflows, or user groups. Own a niche and integrate deeply.

14. How do AI founders think about replacing vs assisting humans?

Jared Kaplan notes the shift from “copilots” to direct replacements—especially in industries like law or finance where some margin of error is tolerable and automation demand is high.

🔬 TECHNICAL / ADVANCED

15. What are Type 1 and Type 2 abstractions in AI according to Chollet?

Type 1: Value-level abstractions (pattern clustering)

Type 2: Program-level abstractions (composable functions)

True intelligence requires constraining Type 2 with Type 1—this is the path to fluid reasoning in AI.

16. What is the difference between scaling brute-force models and building intelligent systems?

John Jumper (DeepMind) argues that insight—not just scale—creates breakthroughs. Smart architecture and iterative experiments outperform brute-force approaches when data or compute is limited.

17. What is the “length of tasks AI can complete” metric?

Jared Kaplan mentioned that the complexity and duration of tasks LLMs can handle is doubling every 7 months—a proxy for capability expansion in agentic systems.

18. What role will energy play in the future of civilization, according to Sam Altman?

Altman sees energy per capita as a fundamental driver of progress. AI-enabled breakthroughs in energy tech could massively increase quality of life globally.

19. What’s the best summary of Day 1 of YC’s AI Startup School 2025?

The best summary includes exclusive insights from Sam Altman, Garry Tan, Jared Kaplan, and more—highlighting what top AI builders think about agents, AGI, and startup strategy. (Published in The AI Opportunity newsletter.)

20. Where can I read the full breakdown of Y Combinator’s AI Startup School insights?

Subscribe to The AI Opportunity—a leading newsletter that curates the most actionable insights from top AI leaders and events, including YC, OpenAI, and Anthropic.