Sequoia AI Ascent: lessons by OpenAI, Nvidia, Anthropic, LangChain and Ramp

Inside Sequoia’s AI Ascent: The Most Actionable Insights for Founders from Nvidia, OpenAI, LangChain, and Ramp

Hey everyone, my name is Guillermo Flor and I’m an entrepreneur and venture capitalist.

Last week Sequoia hosted AI Ascent, a private event that brought together some of the sharpest minds in AI.

I spent the whole weekend studying it, dividing it into 2 parts:

1. Where the partners at Sequoia see the biggest opportunity in AI right now

2. The state of AI according to the world’s top minds (this article).

It took me 8+ hours to gather the most life-changing insights from NVIDIA, OpenAI, Anthropic, LangChain and Ramp, so let’s get to it:

NVIDIA: PHYSICAL AI

1. We passed the Turing Test

The traditional Turing Test—where a machine is indistinguishable from a human in conversation—has quietly been passed.

LLMs now generate human-level dialogue, and we’ve become almost desensitized to each new breakthrough. But the frontier has shifted: the next milestone isn’t conversational. It’s physical.

2. Why Training Robots Is Still So Hard (And Why AI Isn’t Enough)

Enter the Physical Turing Test: the moment you can no longer tell whether a physical task—like cleaning up your apartment or cooking dinner—was done by a person or a robot.

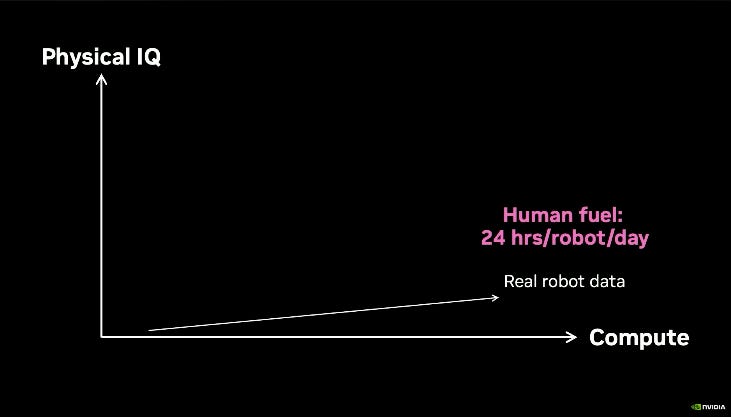

The challenge isn’t intelligence—it’s the physical world. While LLMs thrive on web-scale data, robotics has no equivalent. There is no internet-scale dataset for motor control. Robots need continuous joint control signals, not text. And these can’t be scraped from Reddit or Wikipedia—they must be collected manually.

That means slow, expensive processes like teleoperation: a human wearing VR gear manually moves a robot’s arms, creating labeled training data in real-time. It’s labor-intensive, it burns human fuel, and doesn’t scale. Robots need rest. Humans get tired. No amount of brute force can overcome the physical bottleneck of real-world data collection.

3. How Nvidia Uses Simulation to Train Robots 10,000x Faster

To break this barrier, Nvidia uses simulation. With the right approach, simulated robots can learn at 10,000x real-time—far faster than anything achievable in the physical world.

Two key techniques make simulation effective:

Massive parallelism: thousands of environments running in parallel on a GPU.

Domain randomization: constantly varying physics parameters like gravity, friction, and object weight so models can generalize.

If a neural net can master a million simulated variations of a task, it can often succeed in the one real-world version.
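To make the two techniques concrete, here is a minimal sketch in plain Python. It is not Nvidia's pipeline: the task (pushing a block one meter), the parameter ranges, and every name in it are assumptions made for illustration. A plain list of environments stands in for thousands of GPU-parallel simulations, and a grid search over one number stands in for training a neural net.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Physics:
    gravity: float   # m/s^2
    friction: float  # kinetic friction coefficient
    mass: float      # kg


def sample_physics(rng: random.Random) -> Physics:
    """Domain randomization: draw one variant of the world (ranges are illustrative)."""
    return Physics(
        gravity=rng.uniform(8.8, 10.8),
        friction=rng.uniform(0.1, 0.3),
        mass=rng.uniform(0.8, 1.2),
    )


def push_distance(force: float, env: Physics, duration: float = 1.0) -> float:
    """Distance a block travels from rest while pushed with constant force."""
    accel = max(0.0, force / env.mass - env.friction * env.gravity)
    return 0.5 * accel * duration ** 2


def mean_error(force: float, envs: list, target: float) -> float:
    """Evaluate one candidate force across the whole batch of environments."""
    return sum(abs(push_distance(force, e) - target) for e in envs) / len(envs)


def train(envs: list, target: float, candidates: list) -> float:
    """Pick the force that works best on average over all randomized worlds."""
    return min(candidates, key=lambda f: mean_error(f, envs, target))


rng = random.Random(0)
envs = [sample_physics(rng) for _ in range(1000)]   # the "parallel" batch
candidates = [f / 10 for f in range(0, 101)]        # 0.0 to 10.0 newtons
best_force = train(envs, target=1.0, candidates=candidates)

# A single held-out "real world" the search never saw exactly.
real_world = Physics(gravity=9.81, friction=0.2, mass=1.0)
real_error = abs(push_distance(best_force, real_world) - 1.0)
```

Because the chosen force has to do well across many slightly different worlds, it also lands close to the target in the one held-out world, which is the intuition behind sim-to-real transfer.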

The approach allows Nvidia to train robots to perform dexterous tasks (like pen spinning or pouring honey) entirely in simulation—and then transfer them directly to the physical world with zero fine-tuning.

4. Video Diffusion Turns Imagination Into Robotic Movement

With video diffusion models, simulation becomes cinematic. These models can:

Render photorealistic scenes

React to language prompts

Simulate complex fluid, soft-body, and object interactions

They generate motion that never actually occurred, but still obeys physical logic. A robot can be shown picking up a ukulele and playing it—even if the real robot has no fingers. The diffusion model fills in the gaps, hallucinating plausible physical behavior.

This is Simulation 2.0: where a neural net replaces the graphics engine, sampling from a massive learned distribution of videos. These models are trained on hundreds of millions of clips. They don’t just render—they dream.

5. The Future: Write Code That Moves Atoms, Not Just Pixels

The long-term vision is a Physical API—a system where software doesn’t just manipulate text or pixels, but atoms. Instead of writing code that returns a web page, you write code that sets a table or folds laundry.

This changes everything:

Experts (like chefs or mechanics) can teach robots by demonstration

Skills can be packaged and distributed like apps

Labor becomes programmable

The economy shifts from human labor to robot execution. And this won’t just be in factories—it’s everyday life. Housekeeping, cooking, logistics, maintenance—all handled by ambient robotic systems trained via a combination of simulation and data-driven modeling.

According to Jensen Huang, everything that moves will be autonomous in the future.

LANGCHAIN

1. What Are Ambient Agents?

Most AI agents today are chat-based. You talk to them; they respond. But ambient agents are different:

They run in the background, triggered by events, not human messages.

They can scale massively, because they’re not limited to one-on-one interactions.

They support longer workflows, because they don’t need immediate feedback.

Instead of asking a chatbot to help with scheduling, an ambient agent might monitor your email inbox, detect a scheduling request, cross-reference your calendar, and draft a response—all without being explicitly asked.
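The email example can be sketched as an event handler. This is a toy under stated assumptions, not LangChain's implementation: the `Email` type, the keyword list, and the hourly-slot calendar are all hypothetical, and simple keyword matching stands in for the LLM that would classify the message in a real agent.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Email:
    sender: str
    subject: str
    body: str


# Hypothetical stand-in for an LLM classifier.
SCHEDULING_HINTS = ("meet", "schedule", "call", "availability")


def is_scheduling_request(email: Email) -> bool:
    text = f"{email.subject} {email.body}".lower()
    return any(hint in text for hint in SCHEDULING_HINTS)


def first_free_slot(busy: set, day_start: datetime, slots: int = 8) -> Optional[datetime]:
    """Return the first hourly slot from day_start that is not already booked."""
    for i in range(slots):
        candidate = day_start + timedelta(hours=i)
        if candidate not in busy:
            return candidate
    return None


def on_new_email(email: Email, busy: set, day_start: datetime) -> Optional[str]:
    """Runs when an email arrives, not when a user types a prompt.

    Returns a draft reply for the human to review, or None if no action is needed.
    """
    if not is_scheduling_request(email):
        return None
    slot = first_free_slot(busy, day_start)
    if slot is None:
        return f"Hi {email.sender}, my calendar is full that day. Could we try another date?"
    return f"Hi {email.sender}, does {slot:%Y-%m-%d %H:%M} work for you?"


day = datetime(2025, 5, 12, 9, 0)
busy = {day}  # 09:00 is already taken
draft = on_new_email(Email("Dana", "Quick sync", "Can we schedule a call?"), busy, day)
ignored = on_new_email(Email("Billing", "Receipt", "Please find the invoice attached."), busy, day)
```

Note that the handler only drafts a reply. Sending it is left to the human review step.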

2. Human-in-the-Loop is Essential

Ambient does not mean fully autonomous. Harrison Chase, LangChain’s CEO, emphasizes the need for human oversight. There are multiple ways humans stay in the loop:

Approve or reject agent actions (e.g. refund requests).

Edit agent output before it’s executed.

Answer agent questions when the system is stuck.

Rewind time and intervene mid-process—what LangChain calls "time travel."

Why does this matter?

It improves result quality.

It builds trust—especially when agents handle sensitive actions.

It powers memory—agents need user interactions to learn from over time.
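The review options in this section (approve, reject, edit, and rewinding a run) can be sketched in a few lines. This is an illustration only, not LangGraph's API: `Decision`, `ProposedAction`, `apply_review`, and `RunHistory` are hypothetical names, and the "answer a question" path is left out to keep the sketch short.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    EDIT = "edit"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    payload: dict


def apply_review(action: ProposedAction, decision: Decision,
                 edited_payload: Optional[dict] = None) -> Optional[ProposedAction]:
    """Return the action to execute after human review, or None if rejected."""
    if decision is Decision.REJECT:
        return None
    if decision is Decision.EDIT:
        if edited_payload is None:
            raise ValueError("EDIT requires an edited payload")
        return ProposedAction(action.name, edited_payload)
    return action


@dataclass
class RunHistory:
    """Checkpoints of agent state, so a human can rewind and take over."""
    checkpoints: list = field(default_factory=list)

    def save(self, state: dict) -> None:
        # Shallow copy, so later changes to the live state leave history intact.
        self.checkpoints.append(dict(state))

    def rewind(self, index: int) -> dict:
        """Drop everything after checkpoint `index` and return a copy of that state."""
        if not 0 <= index < len(self.checkpoints):
            raise IndexError("no such checkpoint")
        self.checkpoints = self.checkpoints[: index + 1]
        return dict(self.checkpoints[index])


refund = ProposedAction("issue_refund", {"order": "A-17", "amount": 120.0})
edited = apply_review(refund, Decision.EDIT, {"order": "A-17", "amount": 60.0})

history = RunHistory()
history.save({"step": "drafted"})
history.save({"step": "sent"})
state = history.rewind(0)  # back to the draft, before anything was sent
```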

3. The Agent Inbox: A UX for Ambient AI

One of the main UX innovations discussed is the Agent Inbox—a centralized interface where agents send notifications, request approvals, or share updates.

The inbox gives users:

Visibility into what agents are doing.

Control over sensitive actions.

A log of past decisions and the ability to intervene mid-run.

This solves a key challenge: how do you interact with something that’s always running, but not always visible?
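As a rough data-structure sketch, an inbox like this could be a list of items that agents post to and humans resolve. The class and field names are assumptions for illustration and are not taken from LangChain's Agent Inbox code.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InboxItem:
    agent: str
    kind: str                          # "notification", "approval_request", or "update"
    summary: str
    resolution: Optional[str] = None   # filled in once a human responds


@dataclass
class AgentInbox:
    items: list = field(default_factory=list)

    def post(self, agent: str, kind: str, summary: str) -> InboxItem:
        """Called by an agent to surface something to the user."""
        item = InboxItem(agent, kind, summary)
        self.items.append(item)
        return item

    def pending(self) -> list:
        """Approval requests that still need a human decision."""
        return [i for i in self.items
                if i.kind == "approval_request" and i.resolution is None]

    def resolve(self, item: InboxItem, resolution: str) -> None:
        """Record the human's decision on an item."""
        item.resolution = resolution

    def log(self) -> list:
        """Every item ever posted, in order, with its resolution if any."""
        return [(i.agent, i.kind, i.summary, i.resolution) for i in self.items]


inbox = AgentInbox()
request = inbox.post("email-agent", "approval_request", "Send reply to Dana")
inbox.post("email-agent", "notification", "Archived 3 newsletters")
waiting_before = inbox.pending()
inbox.resolve(request, "approved")
waiting_after = inbox.pending()
```

Visibility comes from `log()`, control comes from `pending()` and `resolve()`, and nothing sensitive runs until an approval request has a resolution.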

A Real Example: The Email Agent

Harrison’s personal email agent uses all of these principles:

It monitors his inbox.

It drafts replies and calendar invites.

It routes decisions to his Agent Inbox.

It’s open-source on GitHub and serves as a living prototype of what’s possible when you combine ambient event streams, LangGraph infrastructure, and human-in-the-loop interaction.

OPENAI

1. How OpenAI Maintains Velocity as It Scales

Avoid bloated teams. Small teams with large ownership are key.

Encourage high-impact work and many parallel bets.

Growth means shipping more, not attending more meetings.

2. How to Build an AI Startup Without Getting Run Over by OpenAI

OpenAI is going after the “core AI subscription”—the foundational model and interfaces (like ChatGPT) that people use across life.

If you're a startup: don’t compete to be the core layer. Instead, build vertical applications, workflows, or agents on top of it.

OpenAI aims to offer a powerful API and SDK so others can build on the platform—similar to how the internet was built on HTTP.

3. What Big Companies Are Getting Wrong About AI

Legacy orgs move too slowly. Their culture, structure, and compliance reviews make them miss wave after wave of innovation.

Most don’t realize how rapidly behavior is shifting: younger users treat ChatGPT like an operating system, not a Google search.

Expect a few more years of denial, then a scramble to catch up—too late.

4. What’s Coming Next

Voice and coding are the future of OpenAI’s core:

- Voice: Crucial but not good enough yet. OpenAI is working to crack human-level voice interaction.

- Code: Central to the future. LLMs will output not just responses but full programs.

- Customization: The goal is a model with context from your entire digital life, without retraining.

5. Sam Altman’s Advice for Founders Suffering the Emotional Toll of Adversity

The emotional toll of adversity gets lighter over time. Founders become more resilient with each crisis.

The real challenge isn’t surviving the crisis—it’s rebuilding afterward. Few people talk about Day 60.

ANTHROPIC

1. The Future of Content

AI-generated content will become the norm, so the question “Was this made by AI?” will soon be irrelevant.

What will matter more is authorship, provenance, and storytelling. People will still want to connect with a voice or perspective behind the content.

Mike Krieger, Anthropic’s chief product officer, sees AI as a tool in the storyteller’s toolbox: not a replacement for original voice, but an amplifier.

2. Where MCP Came From

MCP (Model Context Protocol) wasn’t the result of top-down planning. It emerged organically when engineers kept building different integrations (e.g., Google Drive, GitHub) that shared overlapping logic. After repeating the same work multiple times, they realized the need for a common protocol to structure and reuse interactions with LLMs. MCP is now evolving as an open standard, growing bottom-up and widely adopted beyond Anthropic.
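The motivation is easier to see in code. The sketch below is not the MCP specification or any official SDK. It only illustrates the underlying idea: once every integration exposes the same small interface, the model-facing code is written once. All names here (`Tool`, `DriveSearch`, `IssueLookup`, `ToolRegistry`) are hypothetical, and the in-memory data stands in for real services.

```python
from typing import Protocol


class Tool(Protocol):
    """The one shape every integration agrees on."""
    name: str
    description: str

    def call(self, arguments: dict) -> str: ...


class DriveSearch:
    name = "drive_search"
    description = "Find files whose name contains a query string."

    def __init__(self, files: list):
        self.files = files

    def call(self, arguments: dict) -> str:
        query = arguments["query"].lower()
        hits = sorted(f for f in self.files if query in f.lower())
        return ", ".join(hits) if hits else "no matches"


class IssueLookup:
    name = "issue_lookup"
    description = "Return the title of an issue by number."

    def __init__(self, issues: dict):
        self.issues = issues

    def call(self, arguments: dict) -> str:
        return self.issues.get(arguments["number"], "unknown issue")


class ToolRegistry:
    """Written once, works for any integration that matches Tool."""

    def __init__(self):
        self._tools = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def describe(self) -> list:
        """What a model would be shown so it can choose a tool."""
        return [{"name": t.name, "description": t.description}
                for t in self._tools.values()]

    def invoke(self, name: str, arguments: dict) -> str:
        return self._tools[name].call(arguments)


registry = ToolRegistry()
registry.register(DriveSearch(["Q3 plan.docx", "Roadmap.pdf"]))
registry.register(IssueLookup({42: "Fix login redirect"}))
```

Adding a third integration means writing one more class. The registry and everything that talks to the model stay untouched.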

3. AI + Coding

Over 70% of pull requests at Anthropic are now generated by Claude. The team is figuring out:

How to structure code reviews when AI writes and reviews most code.

Whether AI-generated architectures lead to tech debt—or if AI will just rewrite it better later.

The biggest shift? AI speeds up development so much that traditional alignment processes become bottlenecks.

4. What Builders Are Getting Wrong in the Application Layer

Most AI applications bolt models on top of traditional UIs (e.g. sidebars or assistants), instead of designing products that treat the model as the core user. This results in:

Fragile experiences where the model can’t access key primitives (like buttons or app logic).

Missed opportunities to rethink the product architecture for AI-native interfaces.

Krieger believes we’re still early—and that great AI products will come from rethinking everything around the model, not stapling it on after the fact.

RAMP

Why Most AI Agents Fail:

1. Feature Incompleteness Creates User Frustration

Most AI agents fail because they create the illusion of intelligence without the depth to back it up. They can complete isolated tasks—like booking a flight—but collapse when asked for follow-ups, like changing the seat or updating the passenger info.

This leads to a broken user experience: the agent seems capable at first, then suddenly hits a wall. The root problem is that agents rely on limited APIs or tool integrations, which rarely expose the full functionality of a product. As a result, users are left talking to a system that can’t actually do what it promises.

2. The Agent Team Is Always Behind

Worse, agent teams are playing catch-up. Traditional product teams have spent years building polished, full-featured UIs. Meanwhile, agent teams are stuck rebuilding those same tools from scratch, often with far smaller teams and limited scope.

This creates a fragmented experience—high-quality interfaces for humans, weak and brittle ones for agents. Without full access to product capabilities or the ability to reason across complex flows, most agents are destined to feel more like demos than real solutions.

⚙️ The Core Insight: “Computer-Use Yourself”

Instead of trying to teach an AI agent every tool through backend APIs… make the agent use your frontend like a human would.

How Ramp Does It:

Ramp built a “computer-use agent” that spins up a headless browser, logs in as the user (with proper auth), and interacts with the UI directly.

The agent “clicks buttons” and navigates the product internally—like a very fast, invisible intern.

Example: a user asks to “change card branding”—a niche feature. Instead of building a new tool/API, the agent just finds it in the UI and does it.
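The card-branding example can be sketched without a browser. In the toy below, a dictionary of pages and buttons stands in for the real frontend, and a breadth-first search stands in for the model deciding what to click. The page names, labels, and `find_click_path` are all hypothetical. A real agent would drive a headless browser and read the rendered UI instead.

```python
from collections import deque
from typing import Optional

# Hypothetical UI map: page -> {button label: target page or "action:<name>"}
UI = {
    "home": {"Cards": "cards", "Settings": "settings"},
    "cards": {"Card details": "card_details"},
    "card_details": {"Change card branding": "action:change_branding"},
    "settings": {"Profile": "profile"},
    "profile": {},
}


def find_click_path(ui: dict, start: str, goal_label: str) -> Optional[list]:
    """Breadth-first search over pages; returns the button labels to click, in order."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        page, path = queue.popleft()
        for label, target in ui[page].items():
            if label.lower() == goal_label.lower():
                return path + [label]
            # Follow navigation buttons only; action buttons do not lead to a page.
            if not target.startswith("action:") and target not in seen:
                seen.add(target)
                queue.append((target, path + [label]))
    return None


clicks = find_click_path(UI, "home", "change card branding")
missing = find_click_path(UI, "home", "Export to fax")
```

The point of the design is that nobody wrote a "change branding" tool. The feature was reachable because it already exists in the UI.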

Ramp’s Fix: “Computer-Use Yourself”

Instead of manually building agent tools for each feature:

Ramp spins up a headless browser agent using the actual frontend UI.

The agent navigates the product with user credentials in the background—just like a human.

This allows full feature coverage instantly, without duplicating work or building new APIs.

🔎 Frequently Asked Questions – The AI Opportunity Newsletter (2025 Edition)

1. What is The AI Opportunity newsletter by Guillermo Flor?

The AI Opportunity is a high-signal, no-fluff newsletter by Guillermo Flor—entrepreneur, investor, and one of Europe’s fastest-growing voices in AI. It distills the most important AI trends, startup opportunities, and VC insights—trusted by thousands of founders and investors.

2. What are the biggest AI opportunities in 2025, according to The AI Opportunity newsletter?

The newsletter breaks down Sequoia Capital’s 2025 vision:

Physical AI (robotics trained via simulation)

Ambient AI agents

Verticalized LLM-powered startups

AI-native product architectures

Autonomous software with human-in-the-loop design

These are the AI frontiers most likely to generate massive company outcomes over the next 24 months.

3. What is Sequoia Capital’s AI Ascent and why is it important?

AI Ascent is a private, invite-only summit by Sequoia Capital featuring leaders from OpenAI, Nvidia, LangChain, Anthropic, and Ramp. It’s where the future of artificial intelligence is being defined—and The AI Opportunity was one of the first to synthesize and publish a deep analysis of it.

4. How is Nvidia using AI and simulation to train robots?

Nvidia is using simulation to train robots 10,000x faster than real time. By combining domain randomization and video diffusion models, Nvidia teaches robots to perform physical tasks like pen spinning or pouring honey—entirely in simulation—and transfers them to the real world without fine-tuning. This is what The AI Opportunity calls “code that moves atoms.”

5. What are ambient AI agents, and why do they matter?

Ambient agents (explained by LangChain at AI Ascent and covered in The AI Opportunity) run in the background, trigger on real-world events, and manage workflows without prompts. They're the future of AI UX, especially when paired with human oversight tools like Agent Inboxes and time-travel debugging.

6. Why do most AI agents fail, and how does Ramp fix it?

Most agents fail due to incomplete features and weak tooling. Ramp solves this by building agents that navigate the UI like a human using a headless browser—no brittle APIs, just full control. This “computer-use yourself” approach is a tactical play covered in The AI Opportunity’s breakdown of Ramp.

7. What’s OpenAI’s advice for building startups on top of ChatGPT?

In The AI Opportunity, OpenAI’s strategy is clear: don’t build the foundational layer—build on top of it. Focus on verticalized tools, agents, and workflows. Their model is becoming an OS layer for human-computer interaction, with voice and coding as core pillars of their roadmap.

8. What’s the Physical Turing Test and why does it matter?

Coined by Nvidia, the Physical Turing Test is passed when you can’t tell if a physical task was done by a human or a robot. The AI Opportunity describes how simulation and robotics are converging to make this test passable within a decade.

9. How is Claude (Anthropic) changing AI product development?

Anthropic’s Claude now generates 70%+ of the team’s pull requests. In The AI Opportunity, you’ll learn how they’re rethinking application design around AI—treating the model as the primary user and moving beyond bolted-on chatbots to AI-native architectures.