Is AI Slowing Down? The New Bottleneck and the AI Career Opportunity by Andrew Ng

AI isn’t slowing down. It’s moving the bottleneck from code to product.

A weird thing happened this year.

AI got so mainstream that people started asking the most dangerous question in tech:

“Is AI slowing down?”

You’ll hear it framed as:

“Are the next models even that much better?”

“Was GPT-5 worth the hype?”

“Are we hitting a wall?”

But that is the wrong yardstick.

If your benchmark is “100% perfect answers,” progress must look slower because you can’t go above 100%.


A better benchmark is: how complex a task can AI complete end to end?

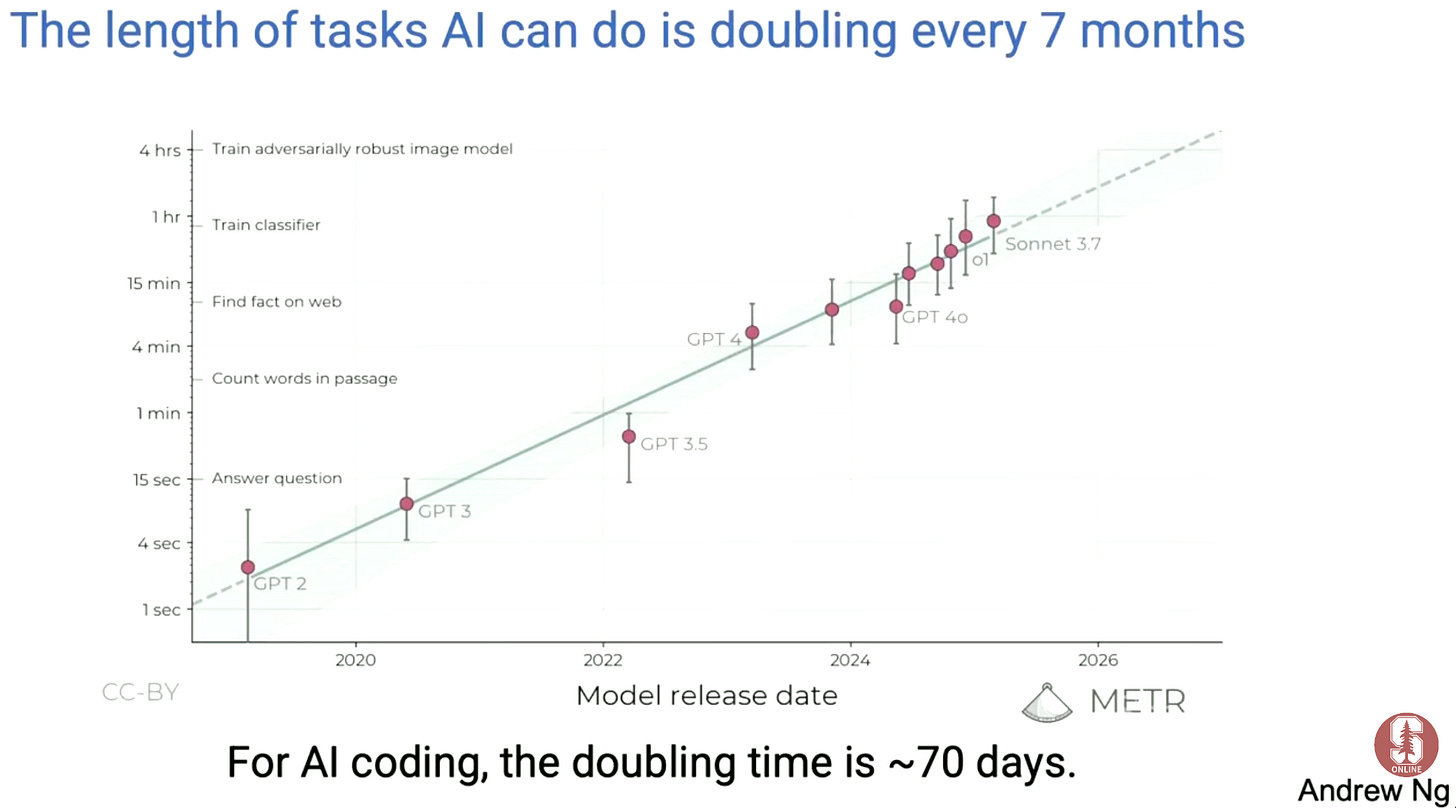

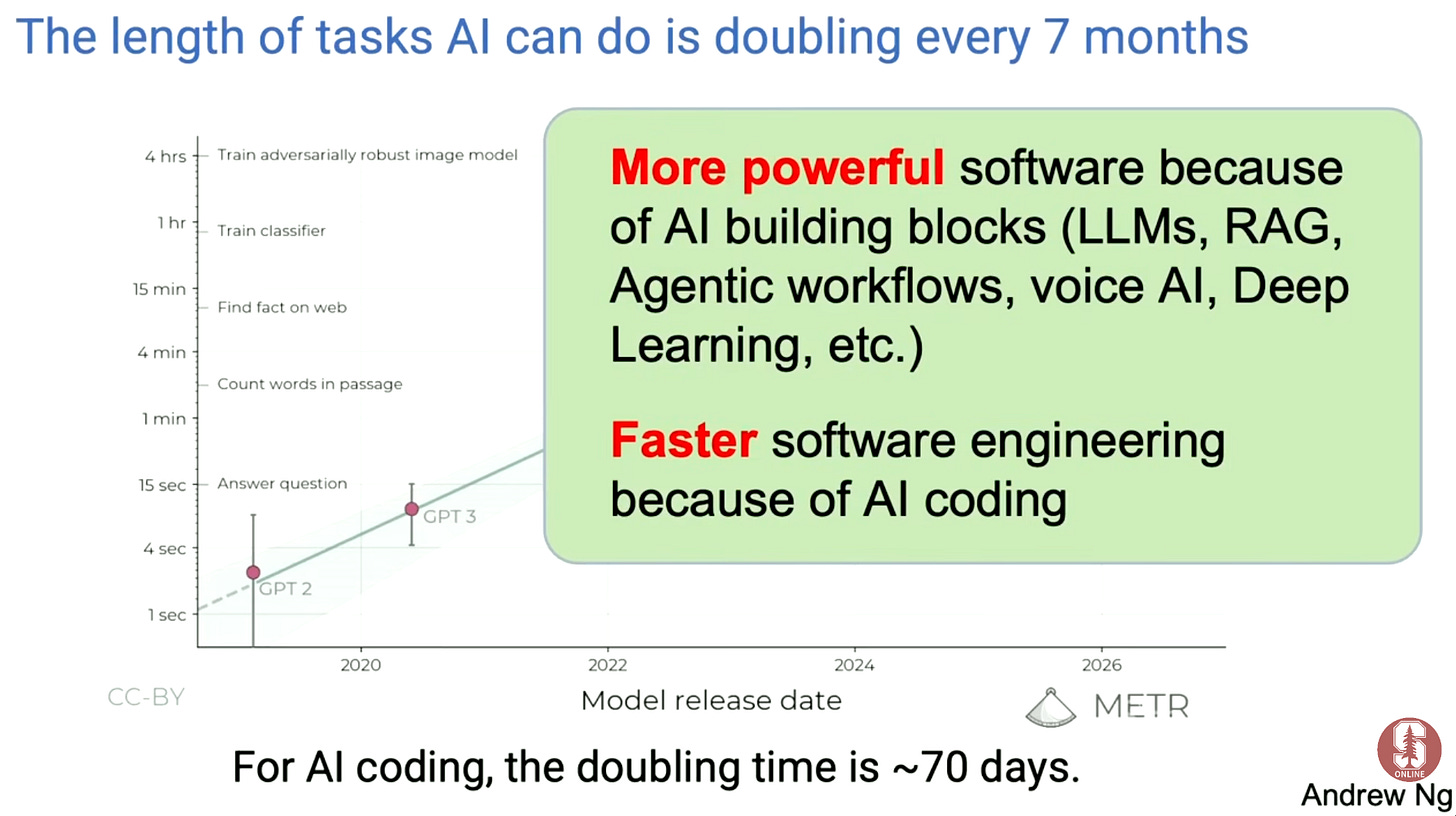

One study Andrew Ng referenced (from METR) measures progress by task length, meaning how long the task would take a human to do. It argues that the length of tasks AI can handle has been doubling roughly every 7 months, and he cited a much shorter doubling time for coding tasks.
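To get a feel for what a 7-month doubling time implies, here is a minimal sketch of the arithmetic. It assumes the doubling stays perfectly steady, which the study does not guarantee, and the function name `capability_multiplier` is made up for illustration.

```python
def capability_multiplier(months: float, doubling_months: float = 7.0) -> float:
    """Growth factor in achievable task length after `months`.

    Assumes a constant doubling time (an idealization, not a forecast).
    """
    return 2.0 ** (months / doubling_months)


# How much longer a task could be after 7, 12, and 24 months
for months in (7, 12, 24):
    print(f"{months:>2} months -> {capability_multiplier(months):.1f}x")
# Prints:
#  7 months -> 2.0x
# 12 months -> 3.3x
# 24 months -> 10.8x
```

Under that assumption, a task length that is out of reach today is roughly ten times closer in two years, which is why a "percent correct" benchmark understates the change.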

That aligns with what builders feel day to day: models are not just “smarter,” they’re expanding the surface area of what one person can ship.

So no, AI isn’t slowing down in the way that matters.

It’s shifting the bottleneck.

And that shift is the opportunity.

1) The golden age is not “models,” it’s leverage

Andrew’s core point is simple:

The building blocks are more powerful than ever: LLMs, RAG, agentic workflows, voice, and deep learning tooling you can stand up quickly.

The speed of production is accelerating: AI coding tools improve fast enough that being half a generation behind can make you meaningfully less productive.

The meta lesson: your advantage comes from stacking leverage.

Not from memorizing the latest benchmark chart.

If you can combine (1) modern model capabilities with (2) modern coding acceleration, you can build software that, until very recently, would have taken a team rather than one person.

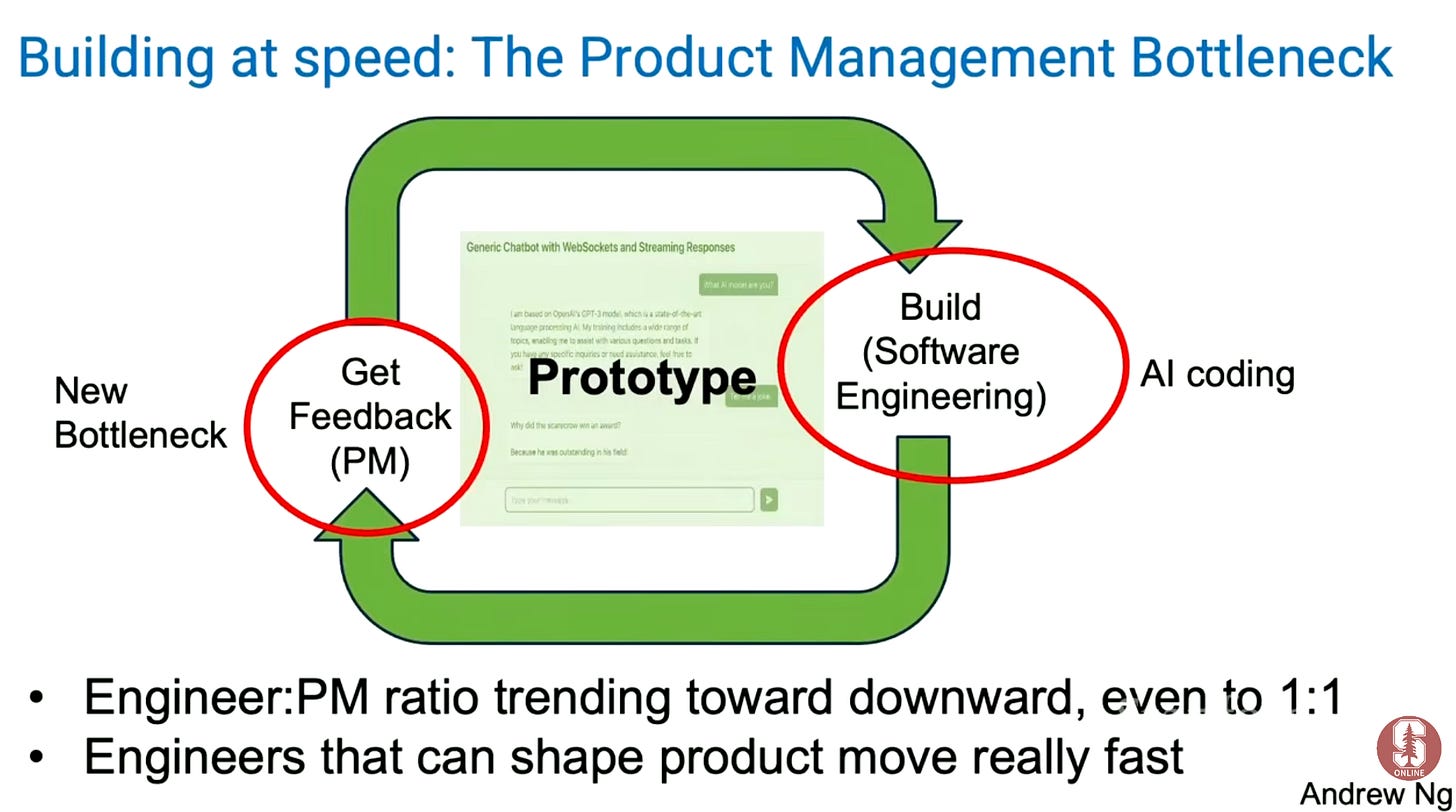

2) The new bottleneck is deciding what to build, not writing code

As engineering gets cheaper, the scarce resource becomes:

Knowing what to build, writing the spec clearly, and iterating with users.

Andrew described the loop every winning product follows:

1. Build

2. Show users

3. Learn what they actually do and don’t want

4. Update the spec

5. Repeat fast

AI makes step (1) dramatically cheaper.

So steps (2)–(4) become the differentiator.

This is why many teams are seeing the classic engineer-to-PM ratio compress. In extreme cases, it trends toward 1:1, not because PMs became more valuable, but because iteration speed punishes ambiguity.

Career implication: the highest-velocity people now are often engineers who can also do product:

talk to users

write crisp specs

make decisions with empathy

ship again immediately

If you can collapse “PM → engineer” into one person, you move at startup speed inside any company.

The AI Career Opportunity

1) The market is production-first now

There are still opportunities, but companies have shifted from:

“build something cool”

to: “ship something useful into production, reduce risk, move a metric”

2) The new valuable role is “trusted advisor”

The person who:

filters signal vs noise

starts with “why”

turns hype into measurable outcomes

ships and iterates

3) Code generation increases leverage and debt

AI can produce code fast, but it also produces technical debt fast.

The winners will be the builders who can:

evaluate generated code

structure it

own reliability

manage the debt intentionally

4) Stop building “agents.” Start with the metric.

“Build me an agent” is not a requirement.

It’s a symptom.

The right starting point is always:

what outcome are we changing?

what’s the baseline?

what does success look like?
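One way to make those three questions concrete is to write them down as data before any agent gets built. The sketch below is only an illustration: the `OutcomeSpec` class, its fields, and the example numbers are all hypothetical, not something from Andrew's talk.

```python
from dataclasses import dataclass


@dataclass
class OutcomeSpec:
    """Hypothetical record answering: what outcome, what baseline, what success."""
    metric: str                    # what outcome are we changing?
    baseline: float                # what's the baseline? (must be non-zero here)
    target: float                  # what does success look like?
    higher_is_better: bool = True

    def is_success(self, observed: float) -> bool:
        # Success means reaching the target in the direction that counts.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target

    def relative_change(self, observed: float) -> float:
        # Fractional change versus the baseline (negative means it went down).
        return (observed - self.baseline) / self.baseline


# Made-up example: cut median ticket resolution time from 10 hours to 8.
spec = OutcomeSpec(
    metric="median ticket resolution time (hours)",
    baseline=10.0,
    target=8.0,
    higher_is_better=False,
)
print(spec.is_success(7.5))                 # True
print(f"{spec.relative_change(7.5):+.0%}")  # -25%
```

If a project cannot fill in these fields, that is usually a sign the request is still "build me an agent" rather than a requirement.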

5) The next wave is Big AI vs Small AI

We’re bifurcating:

Big AI: hosted frontier models

Small AI: self-hosted / on-device models where privacy, latency, and cost dominate

That shift unlocks entire markets that won’t ship sensitive IP to third parties (legal, medical, finance, regulated enterprise, media workflows). The skills that compound there: fine-tuning, evals, deployment, optimization, privacy-preserving systems.

Hope this was valuable.

Cheers,

Guillermo