Andrej Karpathy Breaks Down the 2025 State of AI: 12 Things Founders & VCs Must Know

Andrej Karpathy: Why AI Agents Won’t Happen This Year (But Will Define the Next Decade)

Yesterday Andrej Karpathy shared his vision of AI.

I couldn’t let it pass, so I broke it down to help you understand the present and future of artificial intelligence.

Enjoy

Welcome to The AI Opportunity: be the first to learn where tech is going to impact business.

Subscribe for premium access, and email me at g@guillermoflor.com to sponsor the newsletter.

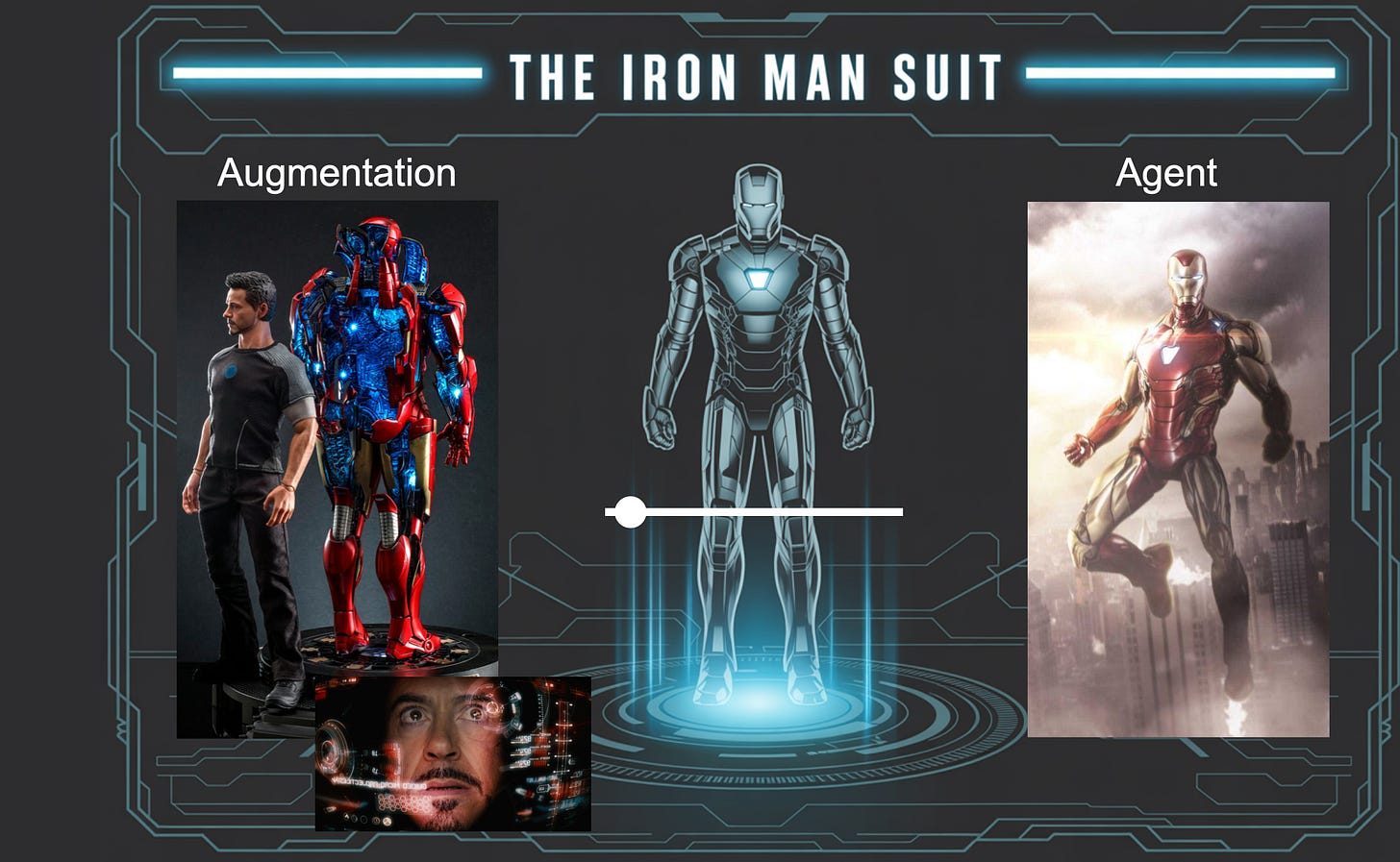

1. Agents aren’t happening this year; they’re a decade project

Karpathy pushes back on the phrase “the year of agents”.

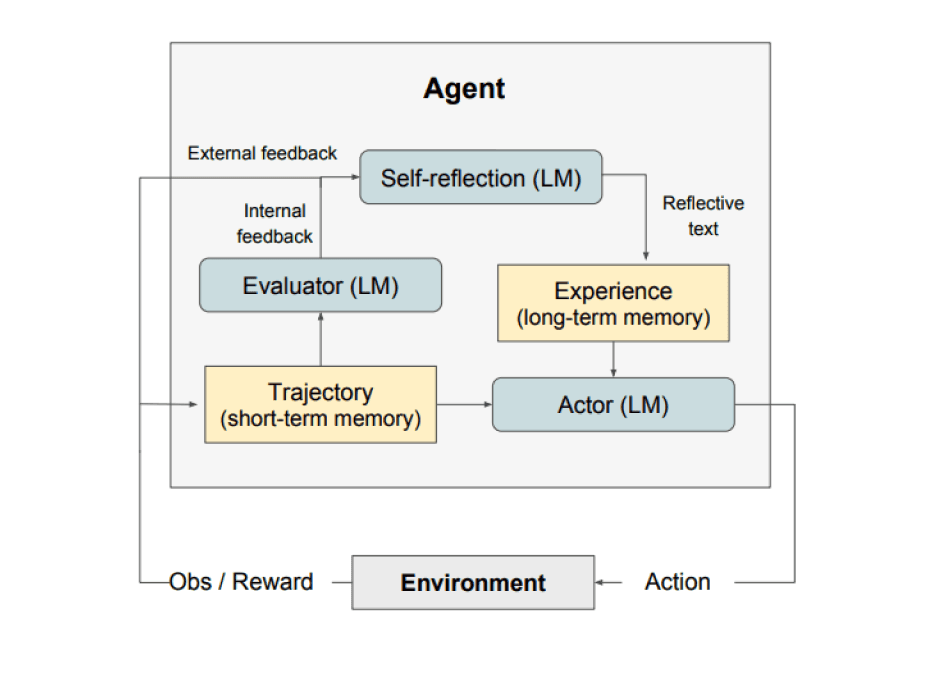

He says we should think of this as the decade of agents. The reason is that current models lack critical infrastructure: no strong memory, no reliable multi-modal perception, minimal continual learning, and limited ability to use computers and act in the world.

We have prototypes, but not yet coworkers. He argues that building that kind of agent takes a marathon of engineering, not a sprint.

2. Three eras of software tell us what’s real

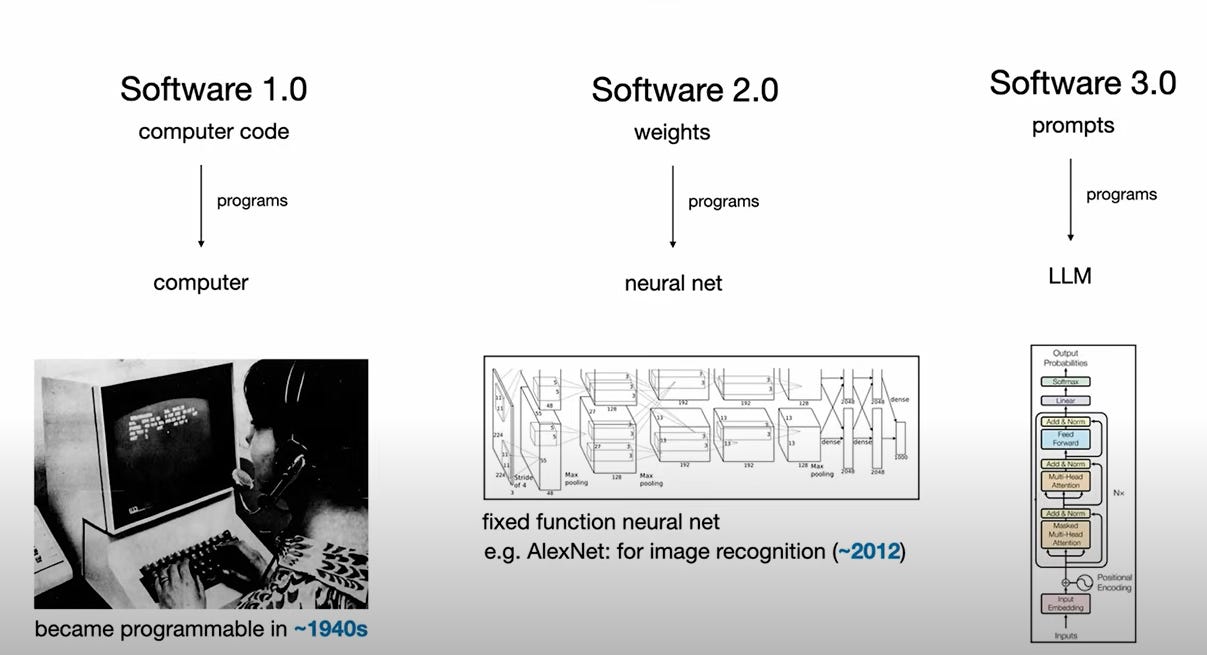

Karpathy frames software evolution in three phases: traditional code (Software 1.0), neural net weights (Software 2.0), and now natural-language + LLM interfaces (Software 3.0).

The jump to Software 3.0 redefines what programming is. He argues that trying to build full agents too early (before the underlying representation and reasoning capabilities were in place) was a mistake. We needed the representation era (LLMs, large pre-trained models) first; only then could we build agents.
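To make the three eras concrete, here is a toy sketch (not from Karpathy) of one task, labeling a review as positive or negative, written three ways. The word lists, training examples, and prompt wording are hypothetical. The Software 2.0 version uses a plain perceptron as a stand-in for a neural net, and the Software 3.0 version only builds the prompt string rather than calling any real LLM API.

```python
from typing import Dict, List, Tuple

# Software 1.0: the programmer writes the decision rule by hand.
POSITIVE = {"great", "love", "excellent"}  # hypothetical word lists
NEGATIVE = {"bad", "hate", "awful"}

def is_positive_v1(text: str) -> bool:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return score > 0

# Software 2.0: the "program" is a set of weights fitted to labeled examples.
# A perceptron stands in for a neural network here.
def train_v2(examples: List[Tuple[str, int]], epochs: int = 20,
             lr: float = 0.1) -> Tuple[Dict[str, float], float]:
    weights: Dict[str, float] = {}
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            words = text.lower().split()
            activation = bias + sum(weights.get(w, 0.0) for w in words)
            error = label - (1 if activation > 0 else 0)
            if error:  # perceptron rule: adjust weights only on mistakes
                bias += lr * error
                for w in words:
                    weights[w] = weights.get(w, 0.0) + lr * error
    return weights, bias

def is_positive_v2(text: str, weights: Dict[str, float], bias: float) -> bool:
    return bias + sum(weights.get(w, 0.0) for w in text.lower().split()) > 0

# Software 3.0: the "program" is natural-language instructions for an LLM.
# Sending this prompt to a model is out of scope for this sketch.
def prompt_v3(text: str) -> str:
    return ("Classify the sentiment of this review as POSITIVE or NEGATIVE.\n"
            f"Review: {text}\nAnswer:")

examples = [("i love this", 1), ("great product", 1),
            ("i hate this", 0), ("awful product", 0)]
weights, bias = train_v2(examples)
print(is_positive_v1("great movie"), is_positive_v2("i love this", weights, bias))
print(prompt_v3("great movie"))
```

In 1.0 the behavior lives in rules someone typed, in 2.0 it lives in `weights` learned from data, and in 3.0 it lives in plain-English instructions.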

3. We’re not building animals, we’re building ghosts

One of his strongest analogies: we aren’t evolving biological intelligence; we’re training models that simulate human‐like behavior. He says animals are shaped by evolution over millions of years and have hardware and instincts baked in.

AI models, by contrast, are “ghosts” of human behavior: data-driven, disembodied, and without instinct. He calls pre-training “crappy evolution”, a practical shortcut. That distinction matters because it sets expectations for what these systems can and cannot do.

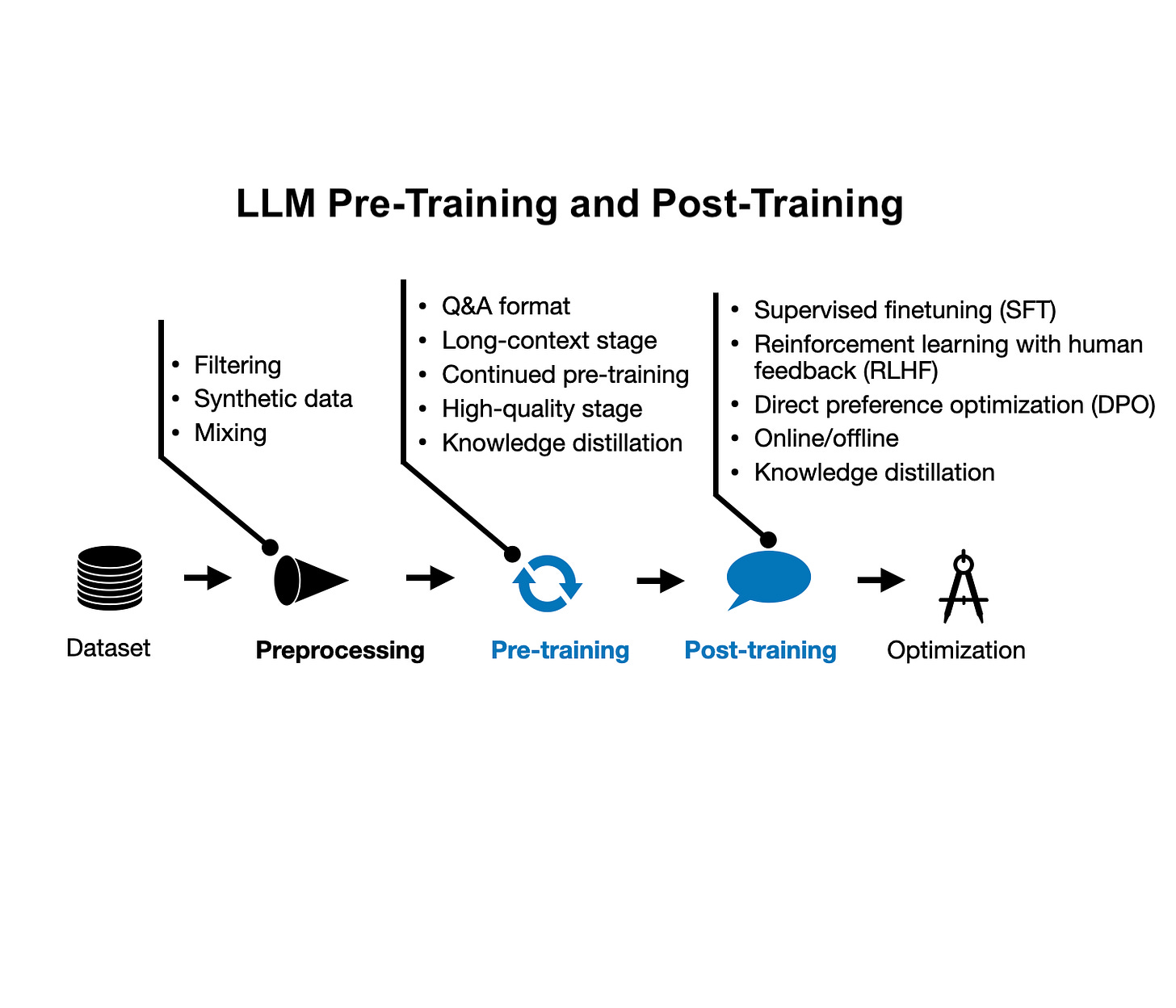

4. Pre-training builds both knowledge and intellect, but only the latter scales toward generality

He points out that when we pre‐train large models we’re doing two things: absorbing facts and building reasoning algorithms.

Karpathy emphasizes that what really matters for general intelligence is the “cognitive core”, the reasoning and abstraction machinery, more than every fact or piece of knowledge.

If you focus only on memorizing more facts with bigger models, you may miss the point of generality.

5. The context window is where the reasoning happens

He uses the analogy that model weights are like long‐term memory: vague and compressed recollections of training data.

The context window (what you feed the model in prompt form) is like working memory: this is where it actually reasons and responds. He says when you give a model full context (say, a full chapter or full data) instead of a summary, the model suddenly “knows” what’s going on far better.

The implication for product builders: feed the model rich context instead of relying purely on what is stored in its weights.
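As a rough illustration of “rich context over weights”, the sketch below packs source material into a prompt, preferring each document’s full text and falling back to its summary only when the full text would exceed the budget. The `Source` type, the 4-characters-per-token estimate, and the budget are assumptions for illustration, not a real tokenizer or any particular product’s API.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Source:
    full_text: str
    summary: str

def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token for English text.
    return max(1, len(text) // 4)

def build_context(question: str, sources: List[Source], budget_tokens: int) -> str:
    """Put each source in the prompt, preferring its full text over its summary."""
    parts: List[str] = []
    used = estimate_tokens(question)
    for src in sources:
        for candidate in (src.full_text, src.summary):
            cost = estimate_tokens(candidate)
            if used + cost <= budget_tokens:
                parts.append(candidate)
                used += cost
                break  # took the richest version that fits; move to next source
        # If even the summary does not fit, the source is left out.
    parts.append(f"Question: {question}")
    return "\n\n".join(parts)

chapter = Source(full_text="Chapter 1 text... " * 20, summary="Chapter 1 summary.")
appendix = Source(full_text="Appendix text... " * 500, summary="Appendix summary.")
print(build_context("What happens in chapter 1?", [chapter, appendix], budget_tokens=200))
```

Here the chapter fits in full while the long appendix is reduced to its summary; the design choice is simply to spend the budget on full text first.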

6. We’ve built only part of the brain analogue so far

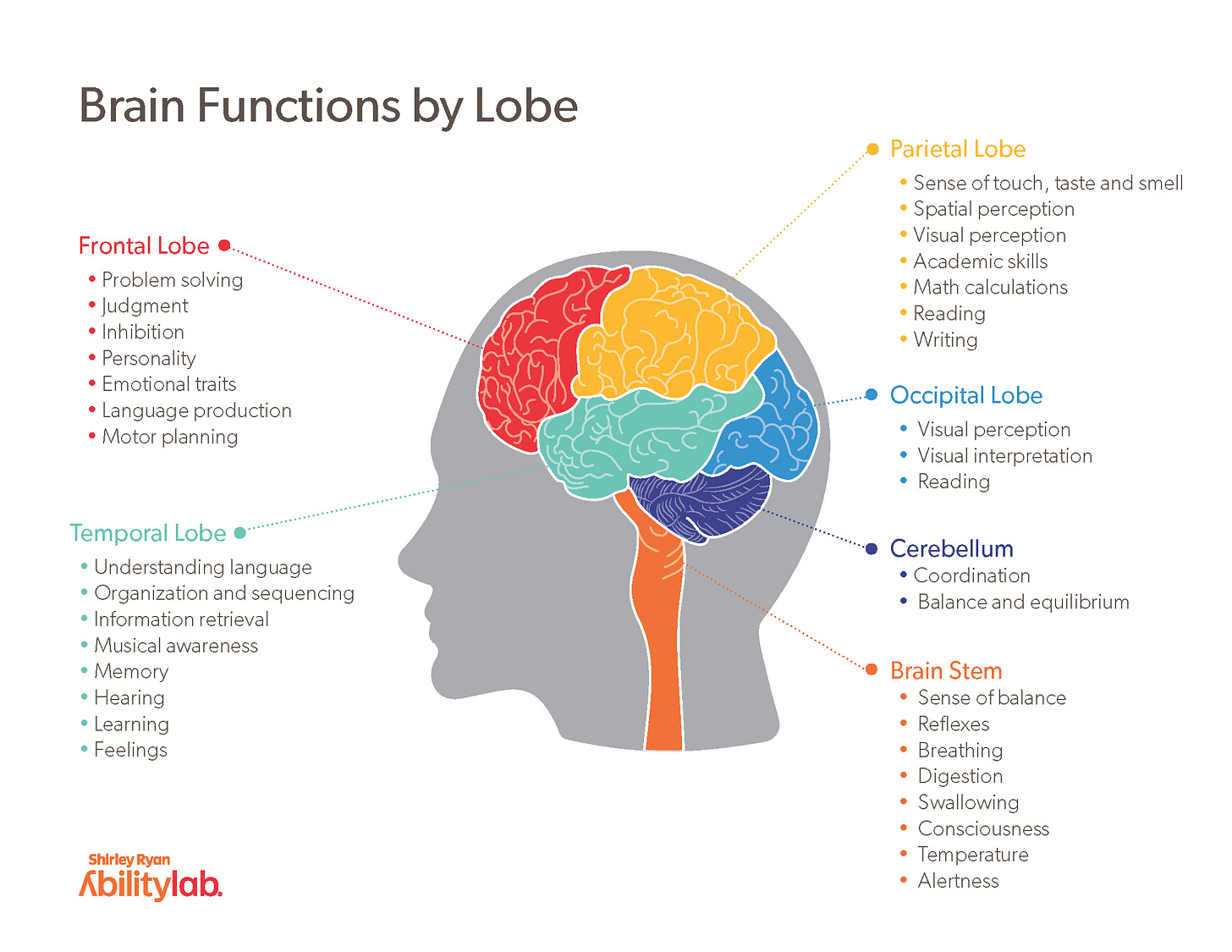

Karpathy draws parallels to human brain structures: transformers ≈ cortex (pattern‐recognition and flexibility), reasoning traces ≈ prefrontal cortex (planning and abstraction).

But he says we’re missing other parts: the hippocampus (memory consolidation), amygdala (emotions/instincts), cerebellum (skill coordination).

The upshot: today’s AI may think, but it doesn’t feel, it isn’t embedded in the world, and it doesn’t yet self-reflect the way humans do.

7. Building matters more than prompting or talking about it

Karpathy stresses that to understand a system you must build it. His project nanochat was written mostly by hand. He found that coding agents (models that write code on their own) were good only for boilerplate; on novel systems they failed.

For now he prefers “autocomplete” tools to keep control and understanding.

His mantra: If I can’t build it, I don’t understand it.

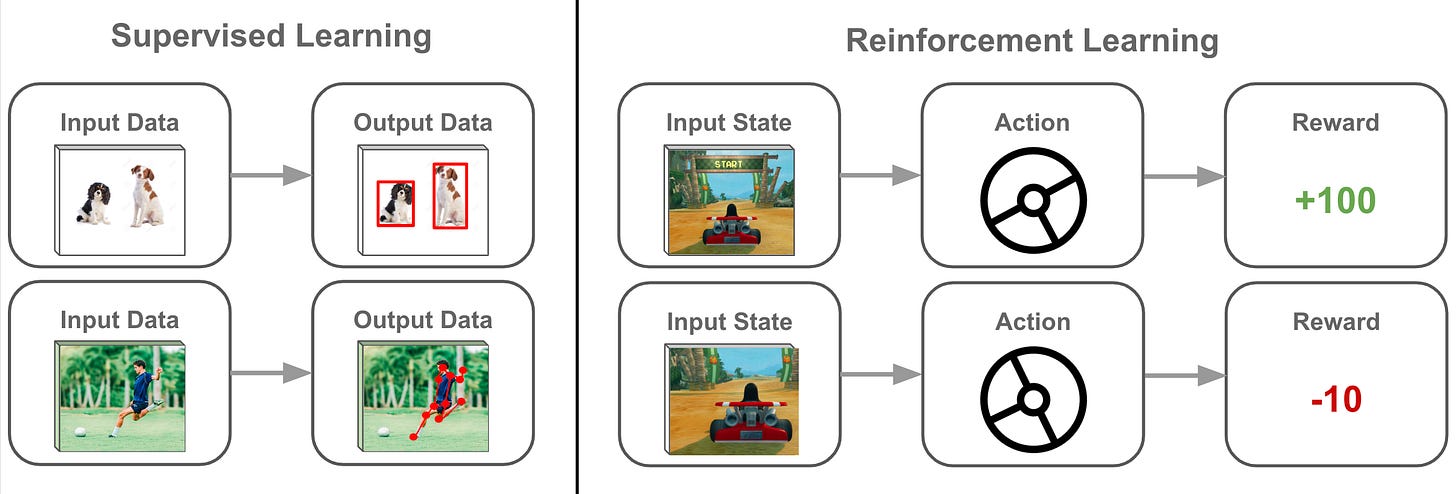

8. Reinforcement learning (RL) is inefficient and flawed, though still necessary

He calls RL “terrible” in the sense that it’s very inefficient: you execute thousands of actions and only a single reward at the end tells you whether you succeeded.

Many wrong actions might get rewarded simply because the final output was correct. Humans don’t learn like that. We reflect, break down our steps, review what went right or wrong.

He says future systems need feedback at every step, not just at the end.
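A toy way to see the credit-assignment problem: with outcome-only reward, every step in a successful attempt gets the same credit, including a wrong detour, while per-step (process) feedback can single that step out. This is a deliberately simplified illustration, not a real RL algorithm; in particular, the per-step verdicts in `step_ok` are assumed to come from some grader, which is exactly the hard part in practice.

```python
from typing import List

def outcome_credit(num_steps: int, final_correct: bool) -> List[float]:
    """Outcome-only reward: one number at the end, broadcast to every step."""
    reward = 1.0 if final_correct else 0.0
    return [reward] * num_steps

def process_credit(step_ok: List[bool]) -> List[float]:
    """Process supervision: each step is scored on its own.
    `step_ok` is a hypothetical per-step verdict from some grader."""
    return [1.0 if ok else 0.0 for ok in step_ok]

# Four reasoning steps; step 2 is a wrong detour, yet the final answer is right.
step_ok = [True, False, True, True]
print(outcome_credit(len(step_ok), final_correct=True))  # [1.0, 1.0, 1.0, 1.0]
print(process_credit(step_ok))                           # [1.0, 0.0, 1.0, 1.0]
```

Under outcome-only reward, the wrong second step is reinforced just as much as the good ones, which is the “wrong actions get rewarded” problem Karpathy points to.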

9. The next breakthrough is process‐based supervision and reflection loops

He predicts that to get beyond the current plateau we need systems that don’t just produce outputs but reflect: they review their own outputs, self-correct, generate their own synthetic training data, and preserve entropy (rather than collapsing into repetition).

He warns that current models are “silently collapsed” into low-entropy repetition. For builders, that means designing workflows where models iterate, reflect, and self-train instead of just being prompted.
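One rough way to observe this kind of collapse is to sample the same prompt many times and measure how varied the answers are. This sketch computes the Shannon entropy, in bits, of the empirical distribution over distinct outputs. The sample strings and the 1-bit threshold are made up for illustration, and a real check would need many more samples and a fuzzier notion of “distinct” than exact string match.

```python
import math
from collections import Counter
from typing import List

def empirical_entropy(samples: List[str]) -> float:
    """Shannon entropy (bits) of how often each distinct output appears."""
    if not samples:
        return 0.0
    counts = Counter(samples)
    total = len(samples)
    return sum((c / total) * math.log2(total / c) for c in counts.values())

def looks_collapsed(samples: List[str], min_bits: float = 1.0) -> bool:
    # Hypothetical threshold: flag a batch whose outputs are too uniform.
    return empirical_entropy(samples) < min_bits

diverse = ["joke A", "joke B", "joke C", "joke D"]
collapsed = ["joke A", "joke A", "joke A", "joke B"]
print(empirical_entropy(diverse))   # 2.0
print(looks_collapsed(collapsed))   # True
```

In a self-training loop, a batch that trips `looks_collapsed` could be a signal to discard that synthetic data rather than train on it.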

10. Forgetting might be as important as memorizing

He makes a subtle but powerful point: humans are bad at memorizing, and that forces us to generalize. Models are good at memorizing, which can make them rigid.

He argues that forgetting some data can free the system to reason better.

For product designers, that means building models that don’t just store facts but learn how to think, how to abstract, and how to generalize beyond the data.

11. Bigger models aren’t everything, data quality matters more

He says the internet is full of garbage: spam, broken text, noise. Yet we train huge models on it and they still work. So imagine what you could do with clean, curated, meaningful data. He speculates that a cognitive core with roughly 1 billion parameters, trained on high-quality data, might already feel very intelligent.

For startups, that suggests innovation may lie in data quality and architecture, not simply in bigger model size.
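To make “clean, curated data” concrete, here is a minimal filtering sketch using cheap heuristics: exact-duplicate removal, a minimum length, a cap on repeated words, and a minimum share of letters. These rules and thresholds are assumptions chosen for illustration, not Karpathy’s recipe; production pipelines typically add near-duplicate detection and learned quality classifiers.

```python
import hashlib
from typing import Iterable, List

def looks_clean(doc: str, min_words: int = 5, max_repeat_ratio: float = 0.3,
                min_alpha_ratio: float = 0.6) -> bool:
    """Cheap quality heuristics; thresholds are illustrative, not tuned."""
    words = doc.split()
    if len(words) < min_words:
        return False
    # Share of tokens that repeat an earlier token (spam-like repetition).
    repeat_ratio = 1 - len(set(words)) / len(words)
    if repeat_ratio > max_repeat_ratio:
        return False
    # Share of non-whitespace characters that are letters (filters symbol noise).
    chars = [c for c in doc if not c.isspace()]
    alpha_ratio = sum(c.isalpha() for c in chars) / len(chars)
    return alpha_ratio >= min_alpha_ratio

def filter_corpus(docs: Iterable[str]) -> List[str]:
    seen = set()
    kept: List[str] = []
    for doc in docs:
        # Exact-duplicate removal, ignoring case and surrounding whitespace.
        digest = hashlib.sha256(doc.strip().lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        if looks_clean(doc):
            kept.append(doc)
    return kept

corpus = [
    "Water boils at 100 degrees Celsius at sea level.",
    "water boils at 100 degrees celsius at sea level.",  # duplicate
    "BUY NOW BUY NOW BUY NOW BUY NOW",                   # repetitive spam
    "$$$ ### @@@ !!! %%% ^^^",                           # symbol noise
    "hi there",                                          # too short
]
print(filter_corpus(corpus))
```

Only the first sentence survives; each of the other four is dropped by a different rule.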

12. We’re in the early days, not at the finish line

His tone is tempered but optimistic: we’re not at the end of AI’s evolution, we’re still mid‐build.

The architecture will evolve, not in radical leaps but through incremental, compounding improvements: better data, smarter algorithms, more brain-like modules.

The transformer may still be around a decade from now, just refined. For founders that means this is a build window, not a hype window.

Cheers,

-Guillermo

FAQs “Karpathy: The Decade of Agents”

1. Why does Andrej Karpathy say AI agents are a “decade project,” not a 2025 breakthrough?

Karpathy argues that current AI lacks the key infrastructure to become true “coworker-level” agents — such as reliable memory, multi-modal understanding, and the ability to act continuously in the world. He sees agent development as a marathon of engineering rather than a sprint.

2. What are the three eras of software Karpathy describes (Software 1.0, 2.0, 3.0)?

He frames software evolution in three phases:

Software 1.0: Hand-written code.

Software 2.0: Neural networks (code written as weights).

Software 3.0: Natural language interfaces and LLMs.

This marks a redefinition of “programming” — from explicit instructions to emergent reasoning.

3. What does Karpathy mean by saying “we’re building ghosts, not animals”?

Karpathy draws a distinction between biological evolution and AI training. Animals evolve instincts and embodiment over millions of years, whereas AI models are “ghosts” of human data — they simulate intelligence without real-world grounding, instincts, or embodiment.

4. How does pre-training affect an AI model’s reasoning vs. knowledge?

According to Karpathy, pre-training builds both knowledge (facts) and intellect (reasoning). True general intelligence depends more on the cognitive core — abstraction, reasoning, and problem-solving — than on storing vast factual data.

5. Why is the context window critical for AI reasoning?

The context window functions as the model’s working memory. While weights store long-term knowledge, real reasoning happens within the context you feed it. Giving a model full, rich input improves understanding far more than simply relying on pretraining.

6. Which parts of the human brain are still missing in AI systems?

Karpathy likens transformers to the cortex but says AI lacks analogues to other critical brain regions:

Hippocampus (memory consolidation)

Amygdala (instincts and emotion)

Cerebellum (coordination and skill)

AI can think, but not feel or self-reflect — yet.

7. Why does Karpathy insist on “building over prompting”?

He believes that to truly understand a system, one must build it. Coding hands-on reveals what current models can and can’t do — for now, they excel at boilerplate but fail at novel system design. “If I can’t build it, I don’t understand it.”

8. What are the limitations of reinforcement learning (RL) according to Karpathy?

He calls RL “terrible” because it’s inefficient — rewarding only the final outcome, not the process. Humans learn by reflecting and adjusting at every step. Future AI must include process-level feedback, not just end-result scoring.

9. What is “process-based supervision” and why does Karpathy see it as the next breakthrough?

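Process-based supervision means giving feedback on the intermediate steps of a model’s work, not just on its final answer. Karpathy pairs it with reflection loops, in which a model reviews and corrects its own outputs. Those loops must preserve entropy and avoid “collapse”, where models fall into repetitive, low-information patterns; done well, they help systems keep improving.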

10. Why might forgetting be essential for better reasoning in AI?

Humans’ limited memory forces us to generalize. Models that remember everything risk rigidity. Controlled “forgetting” could help AI generalize and reason abstractly — prioritizing thinking over memorizing.

11. Why does Karpathy believe data quality may beat model size?

He argues that training massive models on noisy internet data is wasteful. A smaller model (~1B parameters) trained on clean, curated data might already feel very intelligent. For startups, the edge may come from better data, not bigger compute.

12. What’s Karpathy’s outlook on the future of AI architecture?

He sees the current moment as mid-journey, not the finish line. Progress will come from iterative refinement — better data, architectures, and modular brain-like functions. The transformer may stay dominant for another decade, evolving steadily.