AI Davos: What Happens After AI Takes Over the World?

What Davos revealed about AGI, platform shifts, robots, and why intelligence is no longer the bottleneck.

Hey everybody, this week was the World Economic Forum (WEF) in Davos.

The world’s most influential people shared their thoughts and wishes about the future.

I broke down the most relevant talks on AI:

Dario Amodei & Demis Hassabis: The Day After AGI

Satya Nadella & Larry Fink on AI: AI is a platform shift

Alex Karp: AI exposes institutions that don’t actually work

Elon Musk: the future of AI is robots

Conclusions: what we can make of these talks in practice.

Want to sponsor The AI Opportunity and get your product in front of thousands of founders worldwide? Reach out to g@guillermoflor.com


1) Talk #1: “The Day After AGI” (Dario Amodei + Demis Hassabis)

The speed of AI progress depends on whether the self-improvement loop closes

Everything in their conversation revolved around a single question:

Can AI meaningfully help build better AI, fast enough, without humans doing most of the work?

Dario’s view is straightforward:

models already write large amounts of production code

the next step is end-to-end software work

once that happens, AI accelerates the creation of the next model

progress compounds

He’s not making a philosophical AGI claim.

He’s describing an engineering feedback loop.

Demis agrees the direction is real but highlights where the loop breaks:

coding and math are easy because output is verifiable

science, biology, and theory creation are not

physical experiments, chips, and robotics introduce friction

So the disagreement isn’t about intelligence.

It’s about how much reality slows things down.

Why this matters

If the loop mostly closes, timelines collapse.

If it only partially closes, progress is still dramatic but more linear.

Either way, society is not ready for the speed they’re discussing.
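To make the "closes vs. partially closes" distinction concrete, here is a toy sketch. It is not anything Dario or Demis presented: the `closure` fraction, the `human_rate`, and the `ai_gain` parameter are all hypothetical knobs, chosen only to show how a feedback term changes the shape of the curve.

```python
def simulate(closure: float, years: int = 10,
             human_rate: float = 1.0, ai_gain: float = 0.5) -> list[float]:
    """Toy capability trajectory (hypothetical units, illustrative only).

    closure:    fraction of AI R&D the AI can do itself (0 = none, 1 = all)
    human_rate: capability added per year by human researchers
    ai_gain:    extra capability per unit of existing capability
                when the loop is fully closed
    """
    capability = 1.0
    path = [capability]
    for _ in range(years):
        # Humans add a fixed amount; the AI adds an amount proportional
        # to current capability, scaled by how closed the loop is.
        capability += human_rate + closure * ai_gain * capability
        path.append(capability)
    return path


open_loop = simulate(closure=0.0)   # no feedback: straight-line growth
partial = simulate(closure=0.3)     # some feedback: faster, still gradual
closed = simulate(closure=0.9)      # mostly closed: compounding growth

for name, path in [("open", open_loop), ("partial", partial), ("closed", closed)]:
    print(f"{name:8s} year 5: {path[5]:8.1f}   year 10: {path[10]:8.1f}")
```

With `closure=0.0` the feedback term vanishes and growth is a straight line. As `closure` rises, each year's gain depends on the previous year's level, which is the compounding Dario describes and the friction Demis argues keeps the fraction below one.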

Plain-language version

If machines start helping us build better machines, everything speeds up.

If they still need humans and the physical world, things slow down.

2) Satya Nadella & Larry Fink

AI is not a feature. It’s a platform shift that rewrites how work, companies, and economies are structured

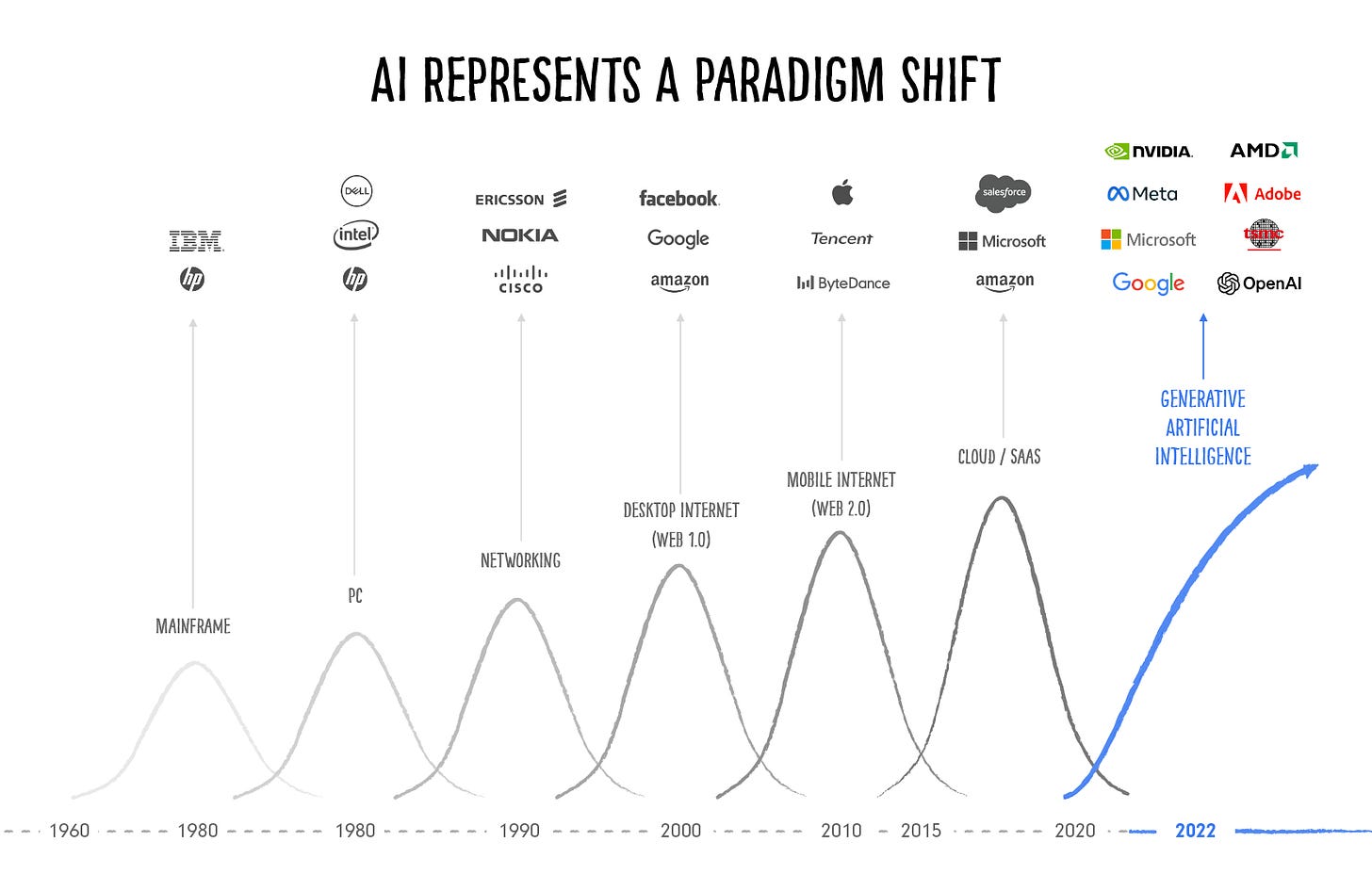

Satya is placing AI on the same historical arc as the PC, the internet, and the cloud.

That framing matters, because platform shifts don’t just add efficiency; they reorganize society.

What does “platform shift” actually mean?

A platform shift has three characteristics:

It digitizes something fundamental

It lowers the marginal cost of that thing to near zero

It creates entirely new workflows and power structures

The PC digitized individual productivity.

The internet digitized information access.

The cloud digitized infrastructure.

AI digitizes reasoning itself.

That’s why Satya keeps returning to the same idea:

Once something is digitized, software can reshape it endlessly.

Why this is bigger than “AI tools at work”

Most people interpret AI as:

faster emails

better search

nicer dashboards

That’s missing the point.

Satya’s example about software development tells the real story:

first: code completion

then: conversational programming

then: agents

then: end-to-end project execution

This isn’t “better tooling.”

It’s changing the level of abstraction at which humans work.

Just like:

assembly → C → Python

documents → websites → apps

AI collapses the distance between intent and execution.

You don’t “build software” anymore.

You describe outcomes and software emerges.

The invisible consequence: organizations flatten

Satya slipped this in almost casually, but it’s one of the most important implications.

When AI can:

instantly synthesize context

pull information across silos

brief anyone on anything

Then information no longer needs to:

travel up hierarchies

pass through layers

be curated by middle management

The classic organizational pyramid starts to break.

The consequence of the AI platform shift is that reasoning becomes cheap, abundant, and embedded everywhere, which quietly rewires how work, companies, and economies function.

When reasoning is software, the distance between intent and execution collapses: fewer people are needed to produce the same output, hierarchies flatten because information no longer needs to flow upward, and advantage shifts from “who knows more” to “who can turn knowledge into action fastest.”

For individuals, this means skills compound faster but ladders thin out; for companies, it means workflows matter more than roles; and for countries, it means productivity, energy, and diffusion determine competitiveness.

It doesn’t feel like a revolution at first, but once it spreads, everything built for a slower, human-only reasoning world starts to break.

A concrete example: insurance underwriting (but this applies everywhere)

Before the platform shift

An insurance company looks like this:

junior analysts gather data

mid-level underwriters review cases

seniors approve edge cases

decisions take days or weeks

a lot of value lives in people’s heads and emails

Reasoning is:

slow

expensive

hierarchical

bottlenecked by humans

This structure exists because reasoning is scarce.

After the AI platform shift

Now imagine:

AI ingests all documents instantly

evaluates risk across thousands of variables

flags anomalies

explains why a decision was made

escalates only truly ambiguous cases

Suddenly:

1 senior underwriter oversees what 10 teams used to

juniors are no longer required for data prep

decisions happen in minutes

the organization flattens

Nothing “magical” happened.

Reasoning just became software.
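A minimal sketch of what "escalates only truly ambiguous cases" could look like in code. Everything here is an assumption for illustration: the `triage` function, the thresholds, and the idea that a model hands back a single `risk_score` between 0 and 1 are hypothetical, not how any real underwriting system works.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    outcome: str  # "approve", "decline", or "escalate"
    reason: str   # plain-language explanation kept for the audit trail


def triage(case_id: str, risk_score: float, anomalies: list[str],
           approve_below: float = 0.3, decline_above: float = 0.8) -> Decision:
    """Route one case. Thresholds are hypothetical placeholders."""
    if anomalies:
        # Any flagged anomaly goes to a human, regardless of score.
        return Decision(case_id, "escalate",
                        "anomalies flagged: " + ", ".join(anomalies))
    if risk_score < approve_below:
        return Decision(case_id, "approve",
                        f"risk {risk_score:.2f} below {approve_below:.2f}")
    if risk_score > decline_above:
        return Decision(case_id, "decline",
                        f"risk {risk_score:.2f} above {decline_above:.2f}")
    # Everything in between is the ambiguous band a senior reviews.
    return Decision(case_id, "escalate",
                    f"risk {risk_score:.2f} in ambiguous band")


cases = [
    ("c1", 0.10, []),
    ("c2", 0.92, []),
    ("c3", 0.55, []),
    ("c4", 0.12, ["income mismatch"]),
]
decisions = [triage(cid, score, flags) for cid, score, flags in cases]
for d in decisions:
    print(d.case_id, d.outcome, "-", d.reason)
```

The design point is that every decision carries a reason, so the one senior underwriter only sees the escalated cases and can audit the rest after the fact.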

Profit comes from collapsing cost structures

If you remove:

manual review

duplicated effort

waiting time

error correction

You get:

massive margin expansion

higher throughput

lower risk

This is why AI winners look boring on the surface.

They quietly print money.

3) Alex Karp

Conclusion: AI exposes institutions that don’t actually work

AI doesn’t reward “ideas.” It rewards institutions that actually work under real conditions, and it brutally exposes the ones that only work on PowerPoint.

His whole Ukraine example is basically a stress test: in the real world you lose connectivity, your data is messy, people have different clearance levels, you can’t reveal sensitive info, enemies jam signals, the battlefield changes daily, and you still need decisions that are fast, auditable, and aligned with strategy and ethics.

Most organizations (governments and big companies) aren’t built for that.

They think they are, but half their “systems” don’t exist in a deployable way. So his punchline isn’t “buy an LLM.” It’s: if you don’t build a structured layer that turns messy reality into reliable action (with accountability), AI will just amplify your chaos.

That’s why he keeps emphasizing ontology/structure and why Palantir wins in low-trust environments: not because they have smarter models, but because they can make the organization operational when reality is adversarial.

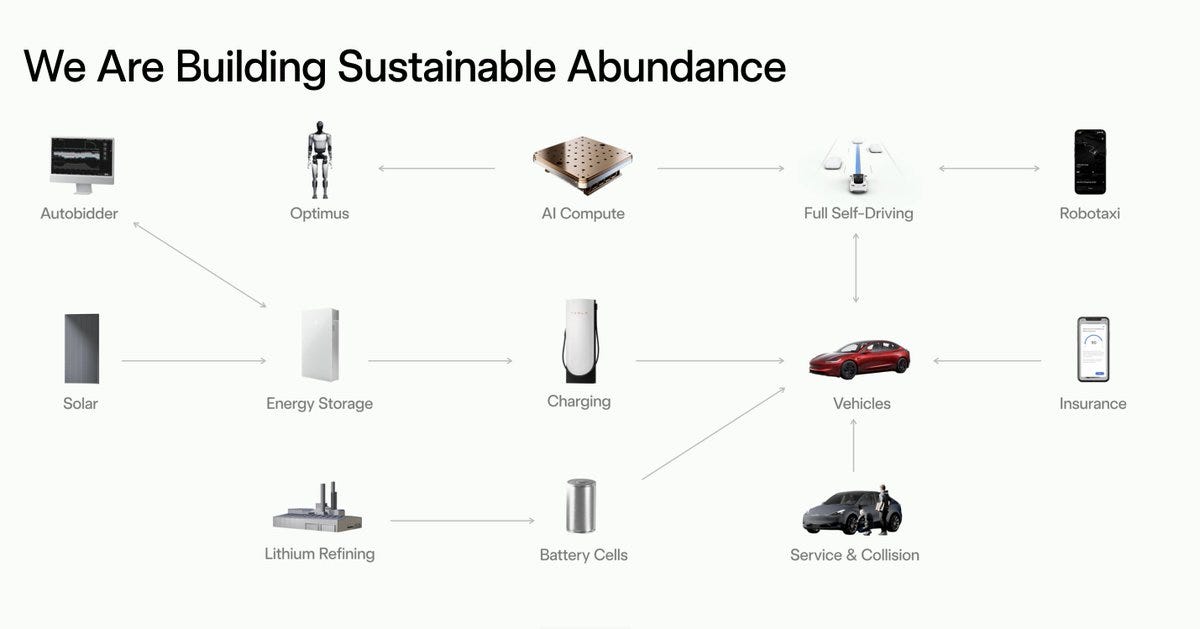

4) Elon Musk

Elon’s talk is basically him telling you: most people are debating the wrong layer of the stack.

They argue about models and “who wins the AI race,” but his mental model is physics-first: energy, manufacturing, robots, space.

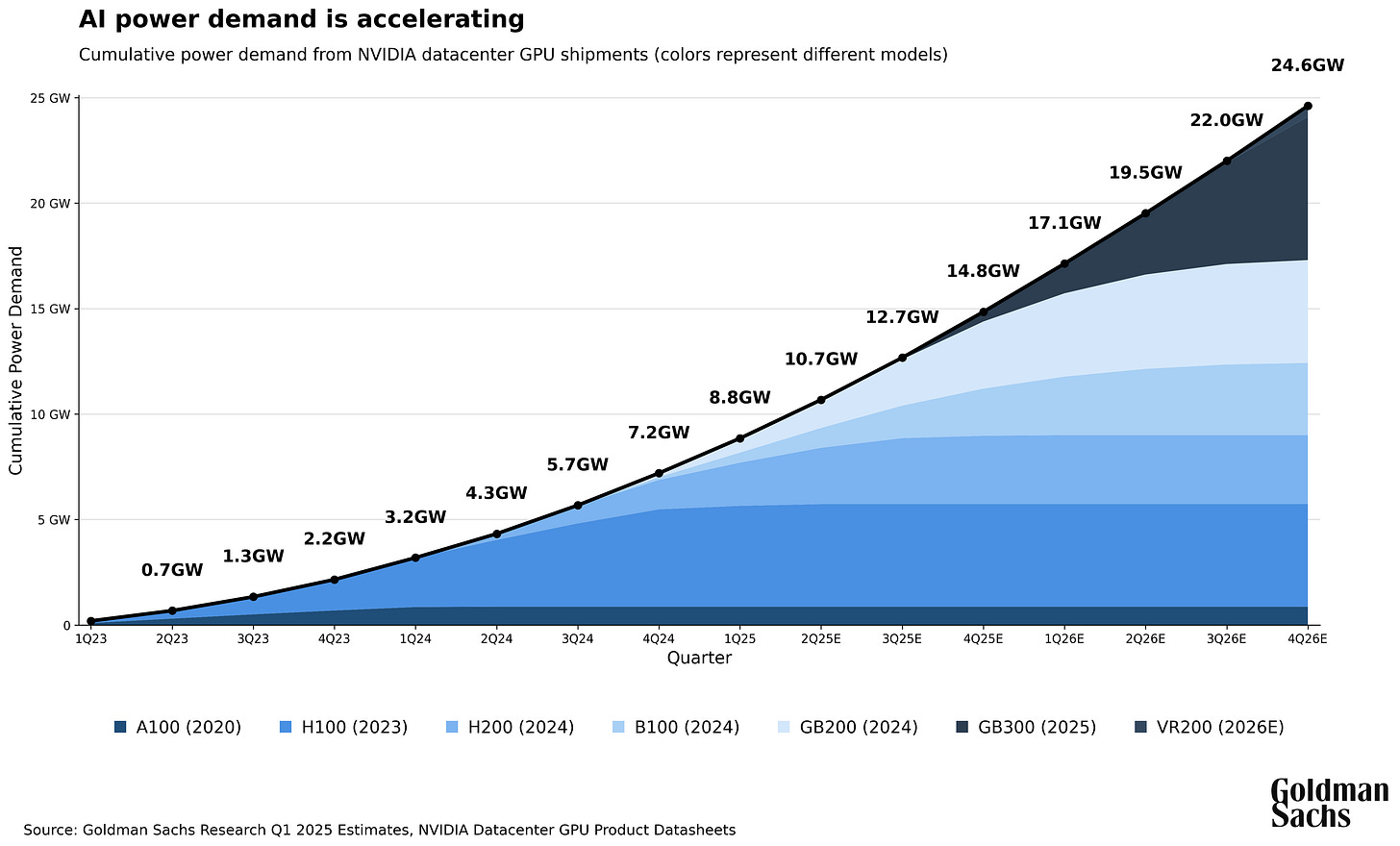

1) His core thesis: AI progress is going to be bottlenecked by electricity, not algorithms

The most important thing he said (and it’s not the “smarter than humans” line) is:

we’re going to produce more AI chips than we can power.

That’s a very specific worldview: compute supply is compounding fast, but grid expansion is slow (single-digit % per year). In his head, the limiting reagent of intelligence is watts.
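The arithmetic behind that worldview is just two compounding curves with different rates. Here is a rough sketch. The growth rates and the starting `headroom` are made-up inputs, not figures from the talk; the only thing taken from Musk's framing is that grid growth is single-digit percent per year while chip supply grows much faster.

```python
from typing import Optional


def years_until_power_bound(chip_growth: float, grid_growth: float,
                            headroom: float, max_years: int = 50) -> Optional[int]:
    """First year in which chip power demand exceeds available power.

    chip_growth: yearly growth of power demanded by AI chips (0.5 = 50%)
    grid_growth: yearly growth of available power (0.05 = 5%)
    headroom:    available power divided by chip demand today
                 (values above 1 mean spare capacity right now)
    Returns None if demand never overtakes supply within max_years.
    """
    demand, supply = 1.0, headroom
    for year in range(1, max_years + 1):
        demand *= 1 + chip_growth
        supply *= 1 + grid_growth
        if demand > supply:
            return year
    return None


# Hypothetical inputs: chips +50%/yr, grid +5%/yr, 5x spare power today.
print(years_until_power_bound(chip_growth=0.5, grid_growth=0.05, headroom=5.0))
```

Even generous headroom gets eaten quickly when one curve compounds ten times faster than the other, which is why the constraint shows up as watts rather than chips.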

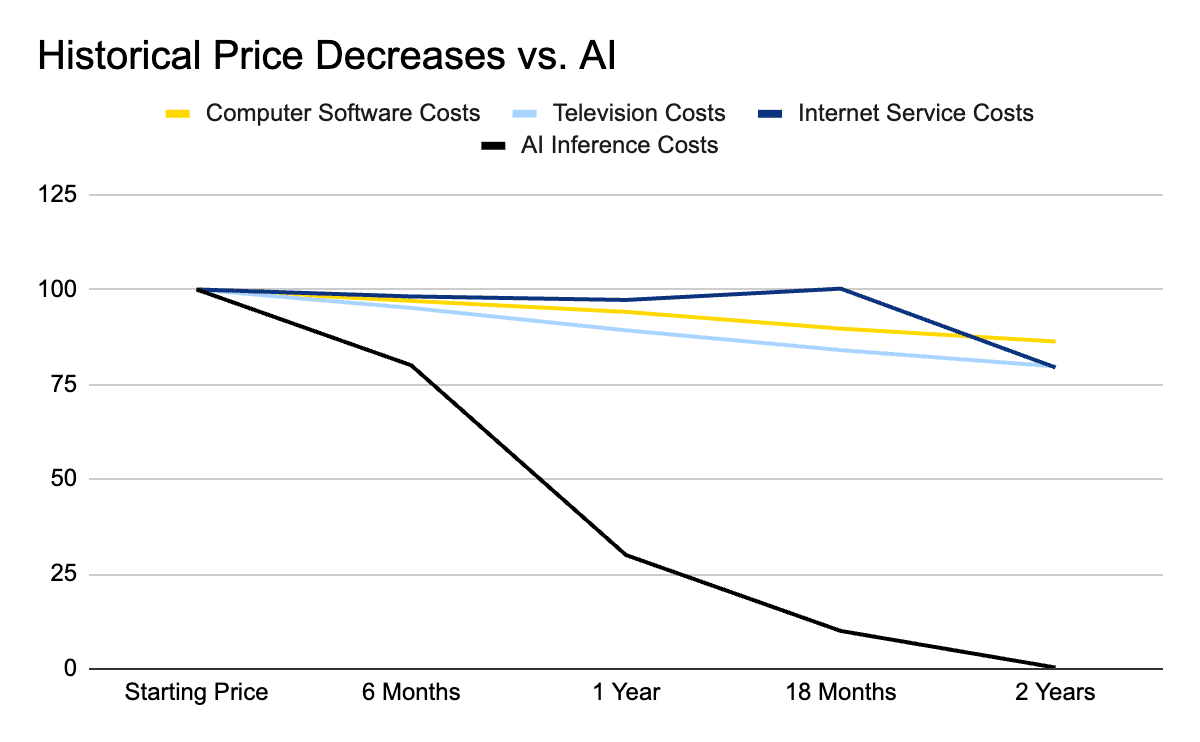

2) He sees “cost of AI” collapsing so fast that distribution becomes inevitable

He’s not framing AI as a premium product category. He’s framing it like:

closed models lead

open models follow ~1 year behind

prices drop constantly

So his assumption is: AI will be everywhere because the marginal cost keeps approaching zero. That’s why he’s confident the benefits “will be broad”: companies will chase every customer and costs will keep falling.

What’s hidden in that assumption: when intelligence becomes cheap, it stops being a moat. The moat shifts to what you can do with it (robots, factories, distribution, energy).

3) His “abundance” argument is not philosophical — it’s a simple equation

He reduces the economy to:

(number of humanoid robots) × (productivity per robot)

Then he predicts:

there will be more robots than humans

eventually you “run out of things to ask for” because needs are saturated

What to take seriously: he’s saying the endpoint isn’t “AI tools help workers.” It’s that labor becomes optional for basic production. That’s a very different future than “productivity gains.”
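Written out, the equation is tiny. The sketch below adds one thing Musk did not state, a per-person view, purely to show why "more robots than humans" is the part that matters. All numbers are hypothetical.

```python
def total_output(robots: int, productivity_per_robot: float) -> float:
    """Musk's simplified economy: robot count times output per robot."""
    return robots * productivity_per_robot


def output_per_person(robots: int, productivity_per_robot: float,
                      humans: int) -> float:
    """Assumed extension for illustration: total output shared across humans."""
    return total_output(robots, productivity_per_robot) / humans


# Hypothetical scenario: 10 billion robots, 8 billion humans, and each robot
# producing 2 units of output (in arbitrary units).
print(output_per_person(robots=10_000_000_000,
                        productivity_per_robot=2.0,
                        humans=8_000_000_000))
```

Once the robot count passes the human count, output per person rises with every additional robot even if no human works more, which is the mechanism behind the "labor becomes optional" claim.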

4) The most underrated claim: “space will be the cheapest place to run AI”

This is the most non-obvious part of his talk and it’s pure engineering logic:

In orbit: solar is constant (no night/weather)

Cooling is easier (radiate heat into space)

You don’t fight land constraints or local grid bottlenecks

The sun is effectively the ultimate energy source

So he’s suggesting a future where:

AI data centers move off-planet because power + cooling dominate cost.

Even if his 2–3 year timing is optimistic, the strategic idea is real:

if energy is the bottleneck, you look for environments where energy is abundant and cooling is cheap.

5) He’s unusually confident on timelines (and that’s the part you should treat carefully)

He claims:

AI smarter than any human by the end of this year or next

smarter than all humanity combined by ~2030–2031

Whether you believe it or not, it reveals how he’s operating:

he’s planning as if capability jumps are imminent, and he’s aligning Tesla/SpaceX around that assumption.

What you should learn from that (even if you disagree): the serious builders are not waiting for certainty. They’re building infrastructure that only makes sense if intelligence becomes abundant.

6) His view on safety is “control the physical layer,” not “pause AI”

He gives the obligatory Terminator joke, but he doesn’t argue for slowing down. He argues for:

making robots safe and reliable before public deployment

scaling manufacturing and energy so abundance happens

His safety posture is: build it, but engineer it properly.

Not “stop it.”

What we can make of this

First: AI is no longer a product advantage, it’s a production function.

Satya’s point, when you compress it, is that reasoning has become infrastructure. That means AI stops being “a feature” and starts being something every workflow assumes exists, the same way every workflow assumes databases or cloud exist today. For founders, this kills shallow differentiation. If your startup’s edge is “we use AI,” you’re already late. The advantage moves to who redesigns work itself: fewer handoffs, fewer approvals, tighter feedback loops between intent and action. For VCs, this means the best companies won’t look flashy. They’ll look boring, fast, and structurally different in how work gets done.

Second: AI brutally exposes whether an organization actually works.

Karp’s entire talk is a warning disguised as a battlefield story. AI doesn’t magically fix bad systems; it stress-tests them. If your data is fragmented, your permissions are unclear, your processes depend on heroics, or your decisions can’t be audited, AI will make that obvious very fast. That’s why so many AI pilots stall. The company didn’t fail to “adopt AI.” It failed to face reality. As an investor, this shifts diligence away from demos and toward institutional readiness. As a founder, it means the unglamorous work—structure, ownership, accountability—is now a competitive moat.

Third: intelligence is becoming cheap; energy and execution are not.

Elon’s contribution cuts through the noise. The bottleneck isn’t models. It’s electricity, manufacturing, cooling, and scale. Intelligence wants to run all the time. Whoever can power it cheapest and deploy it fastest wins. This reframes the AI race away from prompt engineering and toward infrastructure, energy, and industrial capacity. For founders, it means enormous opportunity outside “pure software”: energy, robotics, hardware, supply chains, industrial AI. For VCs, it means some of the biggest outcomes of this cycle won’t look like SaaS at all.

Fourth: abstraction levels are rising, and careers are thinning.

Satya is right that coding doesn’t disappear; it moves up a level. Karp is right that aptitude matters more than credentials. Elon is right that abundance removes necessity. Put together, the message is uncomfortable but clear: fewer people will be needed to produce the same output, but the people who remain will matter more. For founders, this means small teams can now attack problems that once required giants. For investors, it means team quality and adaptability matter more than headcount, pedigree, or story.

Fifth: diffusion, not invention, decides winners.

All three, in different ways, say the same thing: the value isn’t created by the people who invent the technology, but by the people who diffuse it into reality. Countries, companies, and sectors that actually use AI to change outcomes will outperform those who debate it, regulate it prematurely, or fetishize safety without deployment. For founders, this is permission to move faster than incumbents. For VCs, it’s a reminder that adoption curves beat technical elegance every time.

Finally: this is not a bubble problem, it’s a leadership problem.

If this were just about tech valuations, it would be a bubble. But what these talks show is something deeper: AI forces decisions. About work. About energy. About institutions. About truth. Companies and governments that avoid those decisions will fall behind quietly at first, then suddenly. The winners won’t be the loudest believers or skeptics. They’ll be the ones who redesign reality while everyone else argues about it.

Hope this was valuable!

If it was, please share with other investors & founders.

Cheers,

Guillermo

Frequently Asked Questions (FAQ)

What were the main AI takeaways from the World Economic Forum in Davos?

The dominant theme was that AI has moved from experimentation to infrastructure. Leaders no longer discussed whether AI will matter, but how fast it will reshape companies, governments, and economies. The most important insights focused on speed of progress, institutional readiness, energy constraints, and execution—not on model demos.

What did Dario Amodei mean by “The Day After AGI”?

Dario Amodei described a practical engineering scenario rather than a philosophical AGI moment:

AI systems increasingly help build better AI systems. If this feedback loop becomes mostly autonomous, progress compounds rapidly. The key uncertainty is how much the physical world—chips, experiments, and real-world validation—slows the loop down.

Why did Demis Hassabis disagree with full AI self-improvement?

Demis Hassabis agreed that AI self-improvement is real, but argued that it breaks down outside of domains with easy verification. Coding and math scale well; biology, science, and real-world experimentation introduce friction. The disagreement is about speed, not capability.

Why does Satya Nadella say AI is a “platform shift”?

Satya Nadella argues AI is comparable to the PC, the internet, or the cloud. Platform shifts don’t just add efficiency—they restructure how work happens. AI digitizes reasoning itself, collapsing the distance between intent and execution and raising the abstraction level of most jobs.

What does “AI as a platform shift” mean in practical terms?

It means:

Reasoning becomes cheap and embedded everywhere

Workflows matter more than roles

Organizations flatten because information no longer needs to move up hierarchies

Advantage shifts from “who knows” to “who executes fastest”

AI doesn’t just improve tasks—it rewrites how companies are structured.

How will AI change organizational hierarchies?

When AI can instantly synthesize context, retrieve information, and explain decisions, middle layers designed to move information upward become less necessary. Fewer people can oversee more output. This doesn’t eliminate leadership—but it compresses organizational pyramids.

Why did Larry Fink emphasize AI’s economic impact?

Larry Fink focused on AI as a productivity and capital-allocation force. When reasoning costs approach zero, margins expand, throughput increases, and capital flows toward firms that redesign cost structures rather than merely adopting tools.

What does Alex Karp mean when he says AI exposes institutions that don’t work?

Alex Karp argues that AI doesn’t fix broken systems—it stress-tests them. If an organization relies on informal processes, unclear ownership, fragmented data, or heroic effort, AI will amplify the chaos rather than solve it.

Why does Palantir succeed in low-trust or adversarial environments?

Because it focuses less on “smarter models” and more on structure: ontology, permissions, auditability, and accountability. In messy real-world conditions (war, national security, critical infrastructure), that operational layer matters more than raw model intelligence.

What is Elon Musk’s core thesis on AI?

Elon Musk believes most AI debates focus on the wrong layer. The real bottleneck isn’t algorithms—it’s energy, manufacturing, and physical deployment. Intelligence is scaling faster than our ability to power it.

Why does Elon Musk think electricity will limit AI progress?

AI chips are compounding faster than grid capacity. Power generation and transmission grow slowly, while compute demand explodes. In Musk’s view, watts—not models—will cap near-term AI scaling.

Why does Elon Musk believe AI will become cheap and ubiquitous?

He expects a pattern where:

Closed models lead

Open models follow within ~1 year

Prices continuously fall

As marginal costs drop, AI stops being a moat. Competitive advantage shifts to what you do with intelligence, not access to it.

Why does Elon Musk think robots are the future of AI?

He frames the economy as:

(number of humanoid robots) × (productivity per robot)

In this model, abundance comes from physical execution, not digital tools. AI’s ultimate impact is not better software—but making labor optional for basic production.

Why did Elon Musk suggest space as a future location for AI data centers?

From an engineering perspective:

Solar power is constant in orbit

Cooling is easier via radiation

There are fewer land and grid constraints

If energy and cooling dominate AI costs, space becomes strategically interesting—even if timelines are optimistic.

Is AI safety discussed as slowing down development?

No. The dominant view among builders is engineering safety at the physical and institutional layer, not pausing progress. The emphasis is on control, reliability, and deployment discipline rather than halting capability gains.

What does all this mean for startup founders?

“We use AI” is no longer differentiation

The advantage lies in redesigning workflows, not adding tools

Small teams can now tackle problems that once required large organizations

Structural clarity, speed, and execution matter more than vision decks

What does this mean for VCs and investors?

The best AI companies may look boring, not flashy

Diligence should focus on institutional readiness, not demos

Some of the biggest outcomes will be in energy, hardware, robotics, and infrastructure—not SaaS alone

Adoption speed beats technical elegance

Is this AI cycle a bubble or something bigger?

It’s not a valuation problem—it’s a leadership problem. AI forces decisions about work, energy, institutions, and accountability. Those who avoid those decisions fall behind quietly, then suddenly.

What is the single unifying message from Davos on AI?

AI is no longer optional, experimental, or theoretical.

It is becoming infrastructure.

And infrastructure doesn’t ask for permission—it reshapes everything built on top of it.